Introducing Virtlet: VMs and Containers on one OpenContrail network in Kubernetes -- a new direction for NFV?

They need to run their single application workload in large scale production.

In other words, they don't need multi-tenancy, self-service, Murano, Trove, and so on. In fact, they don't even want OpenStack, because it is too complex to ship an immutable VM image with their app.

On the other hand, running Kubernetes instead of OpenStack wasn't the right answer either, because their app is not ready to take its place in the microservices world, and it would take at least six months to rewrite, re-test and certify all the tooling around it.

That was the day I realized how powerful it would be to enable standard VMs in Kubernetes, along with the same SDN we have today in OpenStack. By combining the best of both platforms, imagine how we could simplify the control plane stack for use cases such as edge computing, video streaming, and so on, where functions are currently deployed as virtual machines. It might even give us a new direction for NFV.

That's the idea behind Virtlet.

What is Virtlet? An overview

The real-world example above shows that our customers are not ready for a pure microservices world, as I described in my previous blog. To solve this problem, we're adding a new feature to Mirantis Cloud Platform called Virtlet. Virtlet is a Kubernetes runtime server that enables you to run VM workloads based on QCOW2 images. In other words, Virtlet is a Kubernetes CRI (Container Runtime Interface) implementation for running VM-based pods on Kubernetes clusters. (CRI is what enables Kubernetes to run non-Docker flavors of containers, such as Rkt.) Virtlet was started by the Mirantis k8s folks almost a year ago, with the first implementation done with Flannel.

To keep deployment simple, Virtlet itself runs as a DaemonSet, essentially acting as a hypervisor and making the CRI proxy available to run the actual VMs. This way, it's possible to have both Docker and non-Docker pods run on the same node.

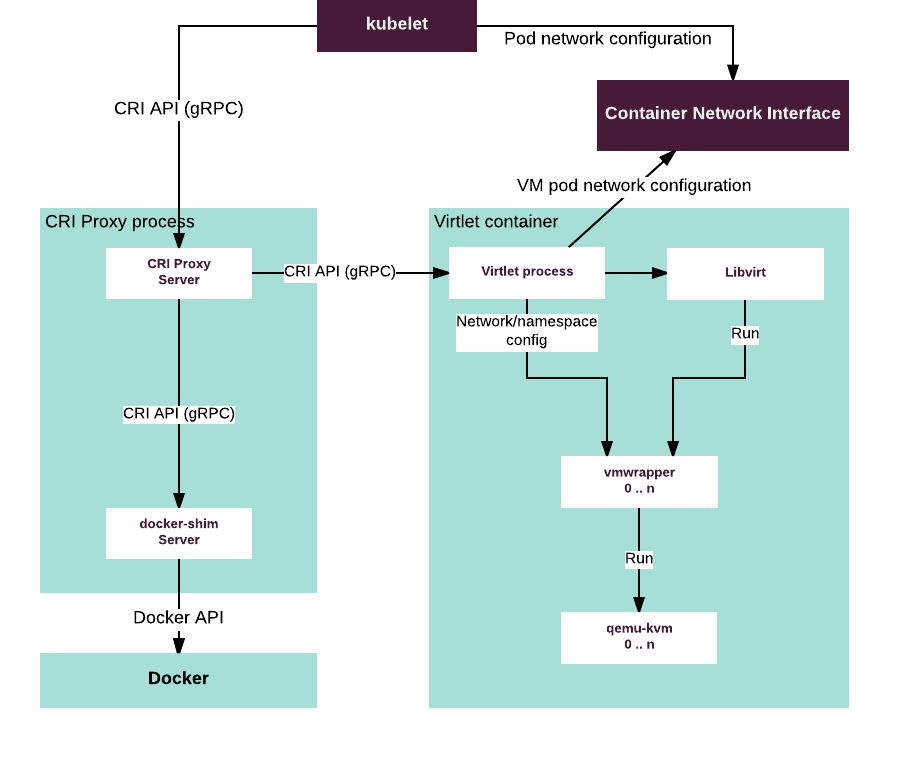

The following figure shows the Virtlet architecture:

Virtlet consists of the following components:

- Virtlet manager: Implements the CRI interface for virtualization and image handling

- Libvirt: The standard instance of libvirt for KVM.

- vmwrapper: Responsible for preparing the environment for the emulator

- Emulator: Currently qemu with KVM support (KVM can be disabled for nested virtualization tests)

- CRI proxy: Makes it possible to mix docker-shim and VM-based workloads on the same k8s node

- Volumes: Virtlet uses a custom FlexVolume driver (virtlet/flexvolume_driver) to specify block devices for the VMs. It supports:

  - qcow2 ephemeral volumes

  - raw devices

  - Ceph RBD

  - files stored in secrets or config maps

- Environment variables: You can define environment variables for your pods, and Virtlet then uses cloud-init to write those values into the /etc/cloud/environment file when the VM starts up.
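To make the environment variable handling more concrete, here is a minimal Python sketch of how pod-level variables could end up as lines in /etc/cloud/environment. It is not Virtlet's code: the `render_environment` helper, the variable names, and the `KEY=value` line format are all assumptions made for illustration.

```python
def render_environment(env):
    """Render Kubernetes-style env entries as KEY=value lines.

    Assumption: the file holds one KEY=value pair per line. The real
    format is whatever Virtlet's cloud-init integration writes.
    """
    lines = [f"{entry['name']}={entry['value']}" for entry in env]
    return "\n".join(lines) + "\n"


# Hypothetical variables, written the way they appear in a pod spec.
pod_env = [
    {"name": "MY_FOO_VAR", "value": "foo"},
    {"name": "MY_BAR_VAR", "value": "bar"},
]

# Print the content that would land in /etc/cloud/environment.
print(render_environment(pod_env), end="")
```

Inside the VM, an application or init script could then read that file to pick up the same settings a containerized workload would get from its environment.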

Demo Lab Architecture

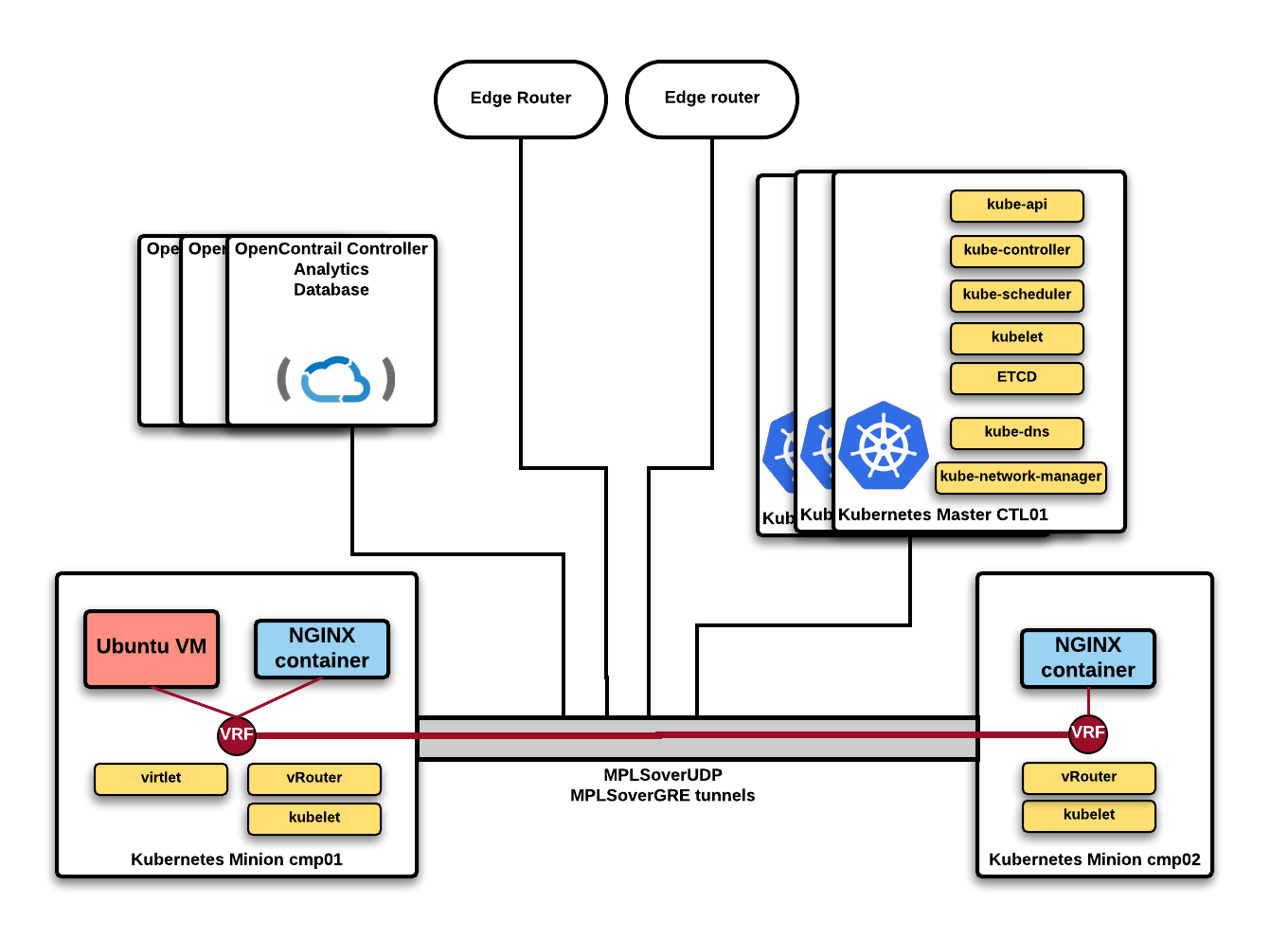

To demonstrate how all of this works, we created a lab with:

- 3 OpenContrail 3.1.1.x controllers running in HA

- 3 Kubernetes master/minion nodes

- 2 Kubernetes minion nodes

So what we wind up with is an installation where we're running containers and virtual machines on the same Kubernetes cluster, running on the same OpenContrail virtual network.

In general, the process looks like this:

- Set up the general infrastructure, including the k8s masters and minions, as well as the OpenContrail controllers. Nodes running the Virtlet DaemonSet should have a label key set to a specific value. In our case, we're using extraRuntime=virtlet. (We'll need this later.)

- Create a pod for the VM, specifying the extraRuntime key in the nodeAffinity parameter so that it runs on a node that has the Virtlet DaemonSet. For the volume, specify the VM image.

- That's it; there is no number 3.
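The two steps above can be sketched in Python as a pod manifest plus a small check of which nodes would qualify. This is an illustration under stated assumptions, not the manifest used in the lab: the `build_vm_pod` and `node_is_eligible` helpers are hypothetical, the image string is a placeholder (how Virtlet expects the QCOW2 image to be referenced is not shown here), and the affinity is written with the `spec.affinity.nodeAffinity` field, which may differ from the form your Kubernetes version expects.

```python
import json


def build_vm_pod(name, image, label_key="extraRuntime", label_value="virtlet"):
    """Build a pod manifest that is pinned to nodes labeled for Virtlet."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "affinity": {
                "nodeAffinity": {
                    "requiredDuringSchedulingIgnoredDuringExecution": {
                        "nodeSelectorTerms": [
                            {
                                "matchExpressions": [
                                    {
                                        "key": label_key,
                                        "operator": "In",
                                        "values": [label_value],
                                    }
                                ]
                            }
                        ]
                    }
                }
            },
            # Assumption: the VM image is referenced like a container image.
            "containers": [{"name": name, "image": image}],
        },
    }


def node_is_eligible(pod, node_labels):
    """Return True if the node labels satisfy the pod's required affinity.

    Only the "In" operator is handled, which is all this sketch uses.
    """
    required = pod["spec"]["affinity"]["nodeAffinity"][
        "requiredDuringSchedulingIgnoredDuringExecution"
    ]
    for term in required["nodeSelectorTerms"]:
        if all(
            expr["operator"] == "In"
            and node_labels.get(expr["key"]) in expr["values"]
            for expr in term["matchExpressions"]
        ):
            return True
    return False


# Placeholder pod name and image location, for illustration only.
pod = build_vm_pod("demo-vm", "example.com/images/demo-vm.qcow2")
print(json.dumps(pod, indent=2))
print(node_is_eligible(pod, {"extraRuntime": "virtlet"}))
print(node_is_eligible(pod, {"role": "plain-docker-node"}))
```

Because kubectl accepts JSON manifests as well as YAML, the printed output could be saved to a file and submitted once the placeholders are replaced with real values.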

[Demo video](https://youtu.be/Q4QXvXu6imk)

Conclusion

Now that we've got the basics, we have a few ideas about what we'd like to do next with Virtlet and the OpenContrail Kubernetes integration, such as:

- Performance validation of VMs in Kubernetes, such as comparing containerized VMs with standard VMs on OpenStack

- iSCSI Support for storage volumes

- Enabling OpenContrail vRouter DPDK and SR-IOV, extending the OpenContrail CNI to make it possible to create advanced NFV integrations

- CPU pinning and NUMA for Virtlet

- Resource handling improvements, such as hard limits for memory, and qemu thread limits

- Calico Support