Do I need OpenStack if I use Docker?

Docker has moved from disruptive technology to commodity at record speed, and its rapid adoption and popularity have brought a lot of confusion with them.

In this post I want to focus on a question I've been hearing more often recently from users who have just started using Docker: does it still make sense to use OpenStack if they've already chosen Docker?

Before giving my take, I'll start with some short background on the rationale behind this question.

Background

In its simplest form, Docker provides a container for managing software workloads on shared infrastructure while keeping them isolated from one another. Hypervisors such as KVM do a similar job by running virtual machines, each with a complete operating system stack and its own virtualized devices. Unlike the virtual machine approach, however, Docker relies on built-in isolation features of the Linux kernel, originally accessed through LXC (Linux Containers).* These features isolate processes and limit their use of memory and, to a lesser degree, CPU and networking resources. Because Docker containers do not require booting a new operating system, they provide a much lighter alternative for packaging and running applications on shared compute resources. In addition, containers use the host's device drivers directly, which makes I/O operations faster than with a hypervisor. This makes it practical to run Docker directly on bare metal, which often leads people to ask whether a cloud such as OpenStack is really necessary if they're already using Docker.

The performance difference between Docker and a hypervisor such as KVM is supported by a recent benchmark by Boden Russell, presented at the recent DockerCon event.

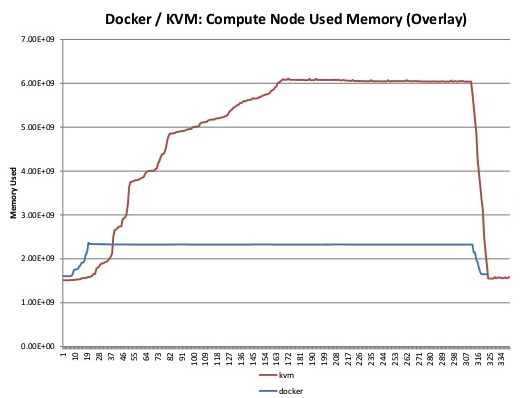

The benchmark is fairly detailed and, as expected, shows a significant difference between the time it takes to boot a KVM guest and the time it takes to start a Docker container. It also shows a fairly big difference in memory and CPU utilization between the two, as the benchmark charts illustrate.

This difference in performance can translate into a proportional difference in density and overall utilization. That, in turn, can mean a big difference in cost, since cost is driven directly by the amount of resources needed to run a given workload.
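To make the density argument concrete, here is a minimal Python sketch of the arithmetic, assuming memory is the only binding constraint. The function `hosts_needed` and every number in it are hypothetical illustrations, not figures from Boden Russell's benchmark.

```python
import math


def hosts_needed(workloads: int, app_mem_mb: int,
                 overhead_mb: int, host_mem_mb: int) -> int:
    """Hosts required when memory is the only binding constraint (hypothetical model)."""
    per_instance = app_mem_mb + overhead_mb   # app memory plus VM or container overhead
    per_host = host_mem_mb // per_instance    # whole instances that fit on one host
    if per_host < 1:
        raise ValueError("a single instance does not fit on one host")
    return math.ceil(workloads / per_host)


# Hypothetical figures: 200 workloads of 512 MB each on 64 GB hosts,
# with an assumed 256 MB overhead per VM versus 16 MB per container.
vm_hosts = hosts_needed(200, 512, overhead_mb=256, host_mem_mb=65536)
container_hosts = hosts_needed(200, 512, overhead_mb=16, host_mem_mb=65536)
print(vm_hosts, container_hosts)  # -> 3 2
```

With these made-up overheads, the lighter per-instance footprint saves one host out of three; the real ratio depends entirely on the measured overheads of a given workload.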

My take

- This question is not specific to OpenStack and applies equally to any other cloud infrastructure. In my opinion, it comes up in the context of OpenStack because OpenStack is popular in private cloud environments, which are the only environments in which a pure Docker alternative can even be considered.

- It's all about the hypervisor!

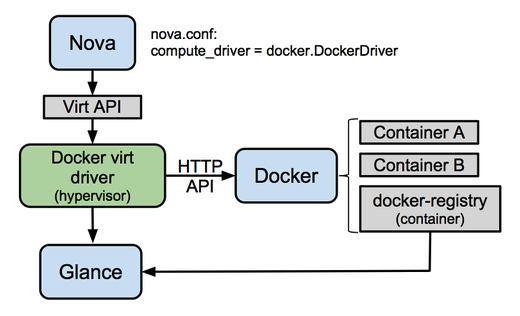

Many of the performance benchmarks compare Docker with KVM and have little to do with OpenStack. In fact, this specific benchmark of both KVM images and Docker containers was run through OpenStack, which shows that the two technologies work nicely together. In that context, most of the utilization arguments become irrelevant when I choose to run OpenStack with a Docker-based Nova compute driver, as illustrated in the diagram from the OpenStack documentation.

- Cloud infrastructure provides a complete data center management solution in which containers, or hypervisors for that matter, are only one part of a much bigger system. Cloud infrastructure such as OpenStack includes multi-tenant security and isolation, management and monitoring, storage, networking and more. All of those services are needed for any cloud or data center management and depend little on whether Docker or KVM is being used.

- Docker isn't (yet) a full-featured VM: it has some serious security limitations and lacks Windows support (as discussed in an email thread on the topic), so it cannot yet be considered a complete alternative to KVM. While there's ongoing work to close those gaps, it is reasonable to assume that adding the missing functionality may come at an additional performance cost.

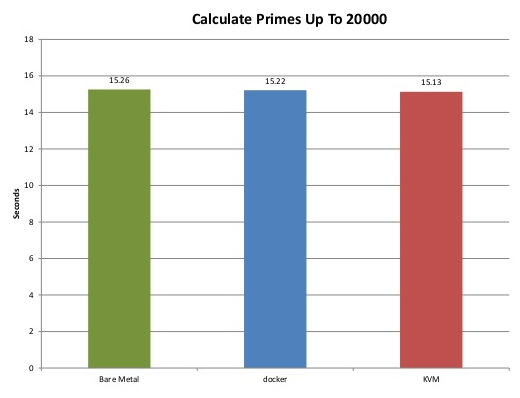

- The gap between Docker and KVM in raw hypervisor and container benchmarks is much larger than the gap in application performance, as the application-level graphs from the benchmark results show. One possible explanation is that applications often use caching to reduce I/O overhead.

- If we run Docker containers inside KVM virtual machines, the difference can become negligible. This architecture typically uses hypervisors to manage the cloud's compute resources, with an orchestration layer such as Heat, Cloudify or Kubernetes on top to manage containers within those hypervisor resources.
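The layering described in the last point, where the hypervisor supplies VMs and an orchestration layer places containers inside them, can be sketched as a simple first-fit placement. This is a hypothetical illustration: the `VM` class, the `place` function and the resource figures are assumptions, and first-fit is a deliberately simple heuristic, not how Heat, Cloudify or Kubernetes actually schedule containers.

```python
from dataclasses import dataclass, field


@dataclass
class VM:
    """A hypervisor-managed VM with remaining capacity for containers."""
    name: str
    cpu_millis: int  # remaining CPU, in thousandths of a core
    mem_mb: int      # remaining memory, in MB
    containers: list[str] = field(default_factory=list)


def place(containers: list[tuple[str, int, int]], vms: list[VM]) -> dict[str, str]:
    """First-fit: put each container on the first VM with enough spare capacity."""
    placement: dict[str, str] = {}
    for name, cpu, mem in containers:
        for vm in vms:
            if vm.cpu_millis >= cpu and vm.mem_mb >= mem:
                vm.cpu_millis -= cpu
                vm.mem_mb -= mem
                vm.containers.append(name)
                placement[name] = vm.name
                break
        else:
            # No VM had room: a real system might ask the cloud for a new VM here.
            raise RuntimeError(f"no VM has room for container {name!r}")
    return placement


vms = [VM("vm-1", 2000, 4096), VM("vm-2", 2000, 4096)]
containers = [("web", 500, 1024), ("db", 1000, 3072), ("cache", 1000, 1024)]
print(place(containers, vms))
# -> {'web': 'vm-1', 'db': 'vm-1', 'cache': 'vm-2'}
```

The hypervisor layer only has to provide VMs of a known size; how tightly containers are packed into them is left to the orchestration layer.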

Conclusion

This brings me to the conclusion that the right way to look at OpenStack, KVM and Docker is as a complementary stack, in which OpenStack plays the role of overall data center management, KVM provides multi-tenant compute resource management, and Docker containers serve as the application deployment package.

In this context a common model would be to use Docker for the following roles:

- Deterministic software packaging that fits nicely with the immutable infrastructure model

- Excellent containerization of microservices and PODs

- Running on top of OpenStack as well as directly on bare metal

Having said all of the above, I do see cases, mostly for well-defined workloads, where cloud infrastructure isn't mandatory. For example, if I were automating a small shop's development and testing environment for DevOps purposes, I would consider running Docker directly on bare metal.

Orchestration can be a great abstraction tool between the two environments.

One of the benefits of using an orchestration framework with Docker is that it lets us switch between OpenStack and bare metal environments at any point in time. We can choose either option simply by pointing our orchestration engine at the target environment of choice. OpenStack Orchestration (Heat) declared support for Docker orchestration starting with the Icehouse release. Cloudify is an open source, TOSCA-based orchestration framework that works on OpenStack and on other environments such as VMware, AWS and bare metal, and it recently added Docker orchestration. Google's Kubernetes is mostly associated with GCE but can be customized to work with other clouds or environments.
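The idea of pointing the same orchestration logic at a different target environment can be sketched as a pluggable backend. Everything here (`Target`, `OpenStackTarget`, `BareMetalTarget`, `deploy`) is hypothetical and makes no real API calls; it is not the interface of Heat, Cloudify or Kubernetes.

```python
from typing import Protocol


class Target(Protocol):
    """Anything that can hand out a host on which to run a container."""

    def provision_host(self) -> str: ...


class OpenStackTarget:
    """Hypothetical backend; a real one would request a VM from Nova here."""

    def __init__(self) -> None:
        self._count = 0

    def provision_host(self) -> str:
        self._count += 1
        return f"openstack-vm-{self._count}"


class BareMetalTarget:
    """Hypothetical backend that hands out machines from a fixed inventory."""

    def __init__(self, hosts: list[str]) -> None:
        self._free = list(hosts)

    def provision_host(self) -> str:
        if not self._free:
            raise RuntimeError("no free bare-metal hosts left")
        return self._free.pop(0)


def deploy(app: str, replicas: int, target: Target) -> dict[str, str]:
    """Map each container replica to a host obtained from the chosen target."""
    return {f"{app}-{i}": target.provision_host() for i in range(replicas)}


# The same deployment request, pointed at two different environments.
print(deploy("web", 2, OpenStackTarget()))
print(deploy("web", 2, BareMetalTarget(["node-a", "node-b"])))
```

The deployment logic stays unchanged; only the target object passed to `deploy` differs, which is the kind of portability an orchestration layer aims to provide.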

* As of version 0.9, the default container technology for Docker is its own libcontainer rather than LXC, but the concept is the same.

This article originally appeared at OpenSource.com.