Making the most of your application performance on OpenStack Cloud

In one of my previous posts I outlined some considerations when deploying OpenStack and different approaches to distributing OpenStack services across hardware to provide HA. However, it is not the OpenStack services themselves that drive the value of going to the cloud. Even once high availability of OpenStack services is assured, the instances and storage will not perform optimally if certain hardware requirements are not met.

In other words, capacity is yet another critical planning area, perhaps even more fundamental than HA. Care should be taken that users’ instances will never be slowed by lack of compute resources (proper overprovisioning); that users will never run out of space for their snapshots; and that volumes will always be available, reliable, and fast. Some of these aspects have nothing to do with OpenStack itself, but with its underlying components, such as the hypervisor, servers, and network parameters.

In this post I have decided to cover what I had neglected previously: performance considerations. Here, I will focus solely on the compute part of OpenStack, which is composed of:

- Nova (instance virtualization),

- Cinder (block storage), and

- Glance (instance snapshots & image repository).

In our experience at Mirantis, Nova, Cinder, and Glance are the typical weak points hit by people running OpenStack. Many of the concerns depend on the types of workload you expect. In the case of a cloud, especially a public cloud, I can make only rough estimates, as use cases depend largely on the client's needs.

Get to know your potential workload

There's no doubt that IaaS has revolutionized IT in many ways by providing dynamic resources, rapid scalability, and developer enablement. However, this does not mean that the IaaS cloud is a panacea for any sort of problem. Some applications are simply not suitable to run in the cloud; for example, apps that cannot scale horizontally, which means they rely on one instance only.

OpenStack does not provide any way to assure HA of instances. Implementing such features requires shared storage and integrated instance monitoring, which is basically not there yet. So people designing an app for OpenStack should always plan for failure of a single instance and have more than one of everything. This obviously poses a problem for application design.

Choose the right proportions for OpenStack compute resources

Compute nodes are the workhorses that run instances. Performance of hypervisors can be negatively affected by the following factors (in order of importance):

- I/O: Since with OpenStack all the instances have their filesystems stored as files on the hypervisor’s local disk, it is obvious they all fight for access to it. It becomes a scarcer resource as the number of cores per server (and also instances per server) increases. After more than 10 years as a sysadmin, I've found that this barrier was always hit first unless you used some enterprise storage with high I/O rates. This has changed, however, in the last couple of years, with the advent of SSD drives where IOPS have gone up dramatically. Equipping compute nodes with a fast SSD seems to be a must.

- RAM: Unless you run your OpenStack installation for HPC computations, this is the second barrier you are likely to hit. In my experience, most applications are memory bound rather than CPU bound. Very frequently I have encountered hypervisors where memory was being swapped in and out while the CPUs were underutilized.

- CPU: The last performance barrier is the CPUs, which tend to be saturated last.

Some precautions can be taken by sysadmins to ensure proportional resource consumption by instances:

- flavors

- quotas

- overprovisioning

The user’s ability to define the hardware parameters of an instance is limited in OpenStack. These limits are imposed by so-called flavors. A flavor is an allowed combination of hardware resources given to an instance. This can be, for example, "1 CPU, 2 GB RAM, 20 GB HDD." System administrators can adjust flavors to be properly aligned with the underlying hardware.

For example, if we have a compute node with 32 GB of RAM, 8 CPU cores, and 240 GB HDD, we will surely not define a flavor like this: “1 CPU, 24 GB RAM, 120 GB HDD,” as it would eat up most of the RAM, half of the HDD, and leave the CPUs underutilized. It would be good here to define the following flavors to reflect the ratio of CPU/RAM/HDD:

- 1 CPU, 4 GB RAM, 32 GB HDD

- 2 CPU, 8 GB RAM, 64 GB HDD

- etc.
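The proportional reasoning behind such flavors can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the `Flavor` class and `proportional_flavors` function are hypothetical names, not part of OpenStack, and the sketch divides the node's RAM and disk evenly per core without reserving anything for the hypervisor itself. For the 8-core, 32 GB, 240 GB node above it yields 4 GB of RAM and 30 GB of disk per vCPU, which you may then round to more convenient sizes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flavor:
    """Hypothetical container for one flavor definition."""
    vcpus: int
    ram_gb: int
    disk_gb: int


def proportional_flavors(node_cpus, node_ram_gb, node_disk_gb, vcpu_sizes=(1, 2, 4)):
    """Return flavors whose RAM and disk scale with the node's per-core share."""
    ram_per_cpu = node_ram_gb // node_cpus    # whole GB of RAM per core
    disk_per_cpu = node_disk_gb // node_cpus  # whole GB of disk per core
    return [Flavor(n, n * ram_per_cpu, n * disk_per_cpu) for n in vcpu_sizes]


# Example node from the text: 8 cores, 32 GB RAM, 240 GB disk.
for flavor in proportional_flavors(8, 32, 240):
    print(flavor)
```

Flavors built this way fill a node evenly: when the last core is handed out, RAM and disk run out at roughly the same time.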

Quotas can be used to limit the number of resources used by a particular tenant: number of instances, block volume number and space, or number of snapshots and images kept in Glance. To properly design quotas, one should think about the potential number of tenants the cloud is going to have and match this number against available hardware.

Remember that if you happen to have more tenants than you initially designed for, you expose yourself to a situation where you might be forced to decrease their quotas or quickly add new hardware. This could be painful if customers have already paid you for the SLA.
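Matching quotas against hardware is simple arithmetic, and a rough sketch might look like the following. The function names, resource keys, and all numbers are made up for illustration; the sketch assumes every tenant gets the same quota and could use it in full at the same time.

```python
def quota_headroom(capacity, per_tenant_quota, tenants):
    """Remaining capacity per resource if every tenant used its full quota.

    Negative values mean the quotas promise more than the hardware can deliver.
    """
    return {
        resource: capacity[resource] - per_tenant_quota.get(resource, 0) * tenants
        for resource in capacity
    }


def max_tenants(capacity, per_tenant_quota):
    """Largest tenant count the capacity can back at full quota usage."""
    return min(
        capacity[resource] // quota
        for resource, quota in per_tenant_quota.items()
        if quota > 0
    )


# Hypothetical cloud-wide capacity and per-tenant quota.
capacity = {"vcpus": 80, "ram_gb": 320, "volume_gb": 10000}
quota = {"vcpus": 8, "ram_gb": 16, "volume_gb": 500}

print(max_tenants(capacity, quota))         # tenants you can safely sign up
print(quota_headroom(capacity, quota, 12))  # what happens with 12 tenants
```

With these numbers the vCPU quota is the limiting factor: the hardware backs 10 tenants, and a twelfth tenant leaves the cloud 16 vCPUs short.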

Lots of cloud instances run underutilized. Given this, it is common for cloud administrators to overprovision resources for better TCO. Overprovisioning is facilitated by technologies such as hyperthreading built into modern CPUs, memory deduplication, storage deduplication, and thin provisioning. On the OpenStack side, one can overprovision resources by adjusting scheduler filters and making compute nodes report more resources than they actually have. Overprovisioning always seems to be asking for trouble. Still, the truth is...everybody does it.
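To get a feel for what overprovisioning does to a single node, here is a small sketch. Nova's scheduler filters take allocation ratios for this purpose (`cpu_allocation_ratio` and `ram_allocation_ratio` in nova.conf); the ratio values and helper names below are purely illustrative assumptions, not recommendations.

```python
def schedulable_capacity(physical_cores, physical_ram_gb, cpu_ratio, ram_ratio):
    """Capacity the scheduler would hand out for the given allocation ratios."""
    return {
        "vcpus": int(physical_cores * cpu_ratio),
        "ram_gb": int(physical_ram_gb * ram_ratio),
    }


def instances_per_node(capacity, flavor_vcpus, flavor_ram_gb):
    """How many instances of one flavor fit; the scarcer resource decides."""
    return min(capacity["vcpus"] // flavor_vcpus, capacity["ram_gb"] // flavor_ram_gb)


# 8 cores and 32 GB RAM, with hypothetical ratios of 4.0 (CPU) and 1.5 (RAM).
node = schedulable_capacity(8, 32, cpu_ratio=4.0, ram_ratio=1.5)
print(node)
print(instances_per_node(node, flavor_vcpus=1, flavor_ram_gb=4))
```

In this example the node advertises 32 vCPUs but only 48 GB of RAM, so it fills up after 12 instances of the "1 CPU, 4 GB" flavor. RAM runs out long before CPU does, which matches the observation above that memory is usually hit before the CPUs.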

There is also the question of small or big compute nodes. With big compute nodes (48 CPUs, lots of storage and RAM) you definitely save precious space in the datacenter. KVM instances, however, are just plain Linux processes with all that implies. Many instances per compute node imply more IO wait as we have more instances fighting for access to the disk. The same applies to network bandwidth. Also, the time spent by the CPU scheduling KVM processes increases (reflected in "system CPU"). Of course nothing prevents us from acting like this, but only for testing purposes ;-)

Choosing the right hypervisor for OpenStack

While there are many flame wars about which hypervisor is “the best,” the real answer is: it depends. The truth about hypervisor support in OpenStack is that if you want everything running fairly smoothly, then use KVM, period. While there are a bunch of other hypervisor drivers available (including Xen, Hyper-V, and ESX), KVM remains the one on which all the new features are tested and implemented. It also gets the most support on the user forums (which is not meaningless given how rough OpenStack still is in some areas).

There is another important consideration: the types of guests you're planning on. KVM is best suited to hosting Linux guests (though running Windows is still possible). If you're only planning on running Windows, it might pay to go for the Hyper-V driver, even though the code has only been around for a couple of months.

Hypervisor and guest optimizations

I don’t feel confident enough to provide guidelines here for all the hypervisors supported by OpenStack. Below is a short list of KVM settings that can improve guest performance in some way. Of course, all of these improvements mean nothing faced with excessive overprovisioning.

Tuning the hypervisor:

- Change the kernel I/O scheduler to “Deadline.”

- Enable Hugepages and preallocate them at boot time.

- Use KSM (kernel same-page merging) to save memory.

- Load vhost_net module.

- Turn on hyperthreading.

- Place guest filesystems directly on hypervisor block devices instead of in files (Folsom comes with an ability to use LVM logical volumes for this purpose).

On guests:

- Use virtio drivers for network and storage (virtio_net, virtio_blk).
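Several of the hypervisor settings above can be checked by reading files under /proc and /sys on the compute node. The sketch below is one way to do that, assuming a Linux host; the function names and the default device name `sda` are hypothetical. On recent kernels the scheduler may be listed as `mq-deadline` rather than `deadline`, and `vhost_net` will not show up in /proc/modules if it is compiled into the kernel.

```python
import re


def active_io_scheduler(scheduler_text):
    """Parse /sys/block/<dev>/queue/scheduler; the active one is in brackets."""
    match = re.search(r"\[([^\]]+)\]", scheduler_text)
    return match.group(1) if match else None


def hugepages_total(meminfo_text):
    """Parse the HugePages_Total line of /proc/meminfo."""
    match = re.search(r"^HugePages_Total:\s+(\d+)", meminfo_text, re.MULTILINE)
    return int(match.group(1)) if match else 0


def ksm_enabled(ksm_run_text):
    """/sys/kernel/mm/ksm/run contains 1 while KSM is merging pages."""
    return ksm_run_text.strip() == "1"


def module_loaded(proc_modules_text, name):
    """Check /proc/modules, where each line starts with a module name."""
    return any(
        line.split()[0] == name
        for line in proc_modules_text.splitlines()
        if line.strip()
    )


def read_text(path):
    """Return the file content, or an empty string if it cannot be read."""
    try:
        with open(path) as handle:
            return handle.read()
    except OSError:
        return ""


def tuning_report(device="sda"):
    """Collect the current state of the settings discussed above."""
    return {
        "io_scheduler": active_io_scheduler(read_text(f"/sys/block/{device}/queue/scheduler")),
        "hugepages_total": hugepages_total(read_text("/proc/meminfo")),
        "ksm_enabled": ksm_enabled(read_text("/sys/kernel/mm/ksm/run")),
        "vhost_net_loaded": module_loaded(read_text("/proc/modules"), "vhost_net"),
    }
```

Calling `tuning_report("sda")` on a compute node returns a dictionary you can compare against the list above. The script only reads settings; it does not change any of them.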

Choosing the right compute node

Given the above requirements, we recommend buying cheap rack servers as compute nodes. CPUs should provide HVM extensions such as AMD-V or Intel VT-x. An SSD is also an essential requirement. NIC bonding is not necessary. As for the CPU/RAM/HDD/NET ratio, it is a question of proper workload estimation (see above).
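Whether a candidate server's CPU offers these extensions can be read from the flags in /proc/cpuinfo on an x86 Linux host: `vmx` indicates Intel VT-x and `svm` indicates AMD-V. The helper below is a minimal sketch with a hypothetical name. Note that the flag only shows that the CPU supports the extension; the firmware may still have it switched off.

```python
def hvm_extension(cpuinfo_text):
    """Return 'Intel VT-x', 'AMD-V', or None based on /proc/cpuinfo flags."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # Everything after the colon is a space-separated flag list.
            flags = set(line.partition(":")[2].split())
            if "vmx" in flags:
                return "Intel VT-x"
            if "svm" in flags:
                return "AMD-V"
    return None
```

On a compute node you would call it as `hvm_extension(open("/proc/cpuinfo").read())`; a result of `None` means KVM would have to fall back to much slower emulation.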

Choosing the right user data storage

In OpenStack (excluding Swift) user data is kept in three places:

- The compute node (Nova): This is where the user instance runs.

- The block storage node (Cinder): This is the store for block volumes that can be attached to instances as additional block devices via iSCSI.

- The image repository (Glance): This is a place where user images and instance snapshots are stored.

A compute node failure is not critical if one has another copy of the instance running on another compute node or an up-to-date snapshot put into Glance. However, one can imagine a situation where an instance fails and there is no backing from the Glance side. So, it turns out that data kept in Glance is critical, as it is the last resort to recover user instances.

The same applies to block volumes and Cinder. The aim of Cinder is to provide storage for critical data. Typically this data is far too precious to be kept on the compute node’s local filesystem. Block storage has its own dedicated server and is exposed to the instance via iSCSI. (What the instance sees is just a block device. In fact, all the data written to this device goes to the block storage server where Cinder runs.) If we lose data on the storage server, then our users might just transparently lose their databases or some equally important data.

Given the above, it becomes obvious that both Glance and Cinder data should be stored in a reliable way. They must meet different performance characteristics, though.

For Glance, it is enough if it just provides a relatively huge amount of storage. Performance is not critical here. The huge space requirement is because many cloud users seem to rely heavily on instance snapshots as a way of cloning and backup. Glance comes with a couple of storage backends, including Swift, which seems to tackle the space problem in a cost-effective way.

For Cinder, it’s all about data accessibility and speed. Cinder exports block devices via iSCSI, which means that instances see just a SCSI disk on their side. If the instance has no access to a SCSI device for a given threshold of seconds, this typically results in a SCSI timeout and the device being remounted read only, etc. Such inaccessibility can be driven by a loss of the volume node itself or a network connection cut.

Another requirement for the Cinder node is a potentially big number of iSCSI clients writing and reading pieces of data at the same time (just imagine having a hundred volumes with databases stored on one server). The volume node needs to provide a high level of IOPS here.

We recommend the following guidelines for the volume node:

- Data volumes based on SSD drives in RAID5 with spares or RAID1.

- Two NICs with bonding/LACP for network reliability and speed.

Typically it is enough to buy a storage server that can hold a large number of disks (the Supermicro storage line of servers is a good example).
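When sizing such a volume node, a back-of-the-envelope IOPS estimate helps compare the two RAID options. The sketch below uses the classic write-penalty model (2 back-end writes per write for RAID1, 4 for RAID5). The per-disk IOPS figure, the disk count, and the read/write mix are hypothetical, and the model ignores controller caches and network limits, so treat the result as a rough planning number only.

```python
# Back-end I/O operations caused by one front-end write.
WRITE_PENALTY = {"raid1": 2, "raid5": 4}


def effective_iops(disks, iops_per_disk, raid_level, write_fraction):
    """Estimate front-end IOPS of an array using the write-penalty model."""
    raw = disks * iops_per_disk
    penalty = WRITE_PENALTY[raid_level]
    return raw / ((1 - write_fraction) + write_fraction * penalty)


# Hypothetical array: 8 SSDs at 20,000 IOPS each, half of the I/O being writes.
for level in ("raid1", "raid5"):
    total = effective_iops(8, 20000, level, write_fraction=0.5)
    print(level, round(total), "IOPS total,", round(total / 100), "per volume at 100 volumes")
```

Under these assumptions RAID5 delivers noticeably fewer IOPS than RAID1 for write-heavy loads such as databases, which is the price of its better usable capacity.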

Networking Capacity in OpenStack

In our setup we have three different types of traffic:

- instance traffic,

- block storage traffic, which means iSCSI, and

- management traffic, the communication of OpenStack components and transfers of instance snapshots to and from Glance.

Each of these has different characteristics. For smaller scale deployments, all of them can be separated logically by VLANs, but physically share one wire. For larger deployments we recommend dedicating each type to a different physical connection.

Instance traffic is diverse and unpredictable. To ensure proper throughput we recommend having at least 1 Gbit/s from the node to the TOR switch, and 10 Gbit/s uplinks from the switch to the “world.” This should scale up to several hundred instances, provided that you run about 10 per compute node.
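The arithmetic behind this recommendation can be sketched as follows, assuming the link speeds are gigabits per second and that all instances on a node send at once (the worst case). The function names and the figure of 30 nodes per switch are hypothetical.

```python
def per_instance_mbit(node_link_gbit, instances_per_node):
    """Worst-case bandwidth share per instance, in Mbit/s."""
    return node_link_gbit * 1000 / instances_per_node


def uplink_oversubscription(nodes, node_link_gbit, uplink_gbit):
    """Ratio of total node-facing bandwidth to uplink bandwidth on one TOR switch."""
    return nodes * node_link_gbit / uplink_gbit


# 10 instances sharing a 1 Gbit/s node link.
print(per_instance_mbit(1, 10))

# 30 nodes (300 instances) behind one 10 Gbit/s uplink.
print(uplink_oversubscription(30, 1, 10))
```

With 10 instances per node each one is left with about 100 Mbit/s in the worst case, and 30 such nodes oversubscribe a 10 Gbit/s uplink by a factor of 3. Whether that factor is acceptable depends on how much of the instance traffic actually leaves the rack.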

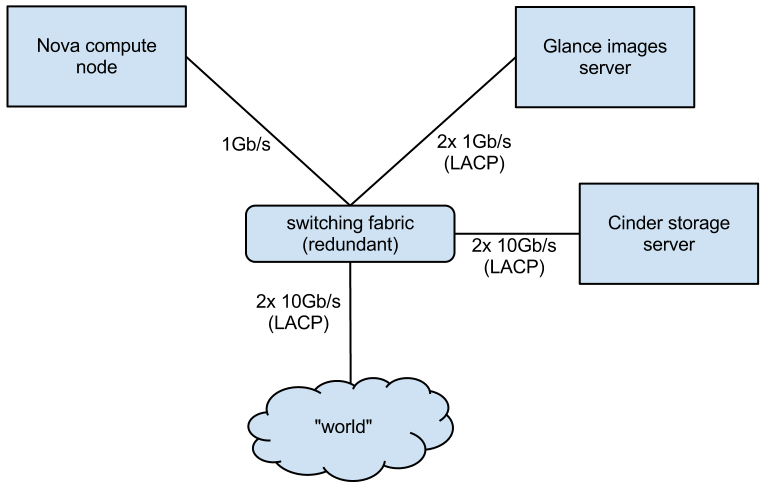

Since block storage needs to be “always available and fast,” we recommend bonding two interfaces with independent data paths. 10 Gbit/s interfaces should be considered as the link to the block storage node. It is also recommended to put iSCSI traffic on a separate wire and turn on some iSCSI optimizations on the switch, the Cinder node itself, and the compute nodes as well (e.g., jumbo frames, txqueuelen).

Glance doesn’t really need to perform at close-to-realtime speeds with heavy I/O rates. This is because all instance templates get cached locally on compute nodes, so each needs to be fetched from Glance only once. Also the process of transferring snapshots back and forth does not seem to demand cosmic speeds. It would be enough to put it on a 2x1 Gbit/s bonded interface. The picture below shows a summary of network speeds proposed above.

Figure: Making the most of OpenStack Cloud Performance

Avoid “enterpriseness” and shared resources

Very often customers ask us what you get with OpenStack compared to enterprise class virtualization solutions like vCloud. In fact, vCloud has lots of advanced features to provide instance live migrations, failure detection, and HA. This allows cloud users to deploy their apps in a really comfortable way. They don't need to plan for failures, as vCloud will automatically track their servers and take appropriate actions when different elements of the vCloud infrastructure happen to go down.

While these features seem really cool at first sight, they also come with some tradeoffs. One of the building blocks of vCloud is shared storage. To be reliable it usually needs to come from some kind of SAN infrastructure, which consists of a storage array and iSCSI or Fibre Channel networks. At a certain scale where you're running hundreds of hosts, such a SAN becomes a single point of failure and a scalability bottleneck. To maintain proper I/O rates, we need to make it really fast. Unsurprisingly, this increases our cash spent on storage support contracts (which can reach millions of dollars).

With OpenStack it is a totally different story. By default, its architecture is “shared nothing,” which means almost linear scalability of compute and block storage servers. Each compute node has independent, local storage. We have block live migration for KVM and Xen to move instances around with no disk array underneath.

Most components such as API services, scheduler, etc., are stateless and can be scaled by simply adding more processes. Block storage can be expanded by just buying more storage nodes. Glance is expanded by adding storage nodes to the underlying Swift installation. And Swift is obviously all about a cheap, shared-nothing PC cluster.

Some of the tradeoff here, however, is thrown onto the users. They are required to think about the compute resources as "not guaranteed": different compute nodes can fail at any time. Also, there is no built-in infrastructure to monitor them.

Summary

I've hit some of the major issues you need to consider when planning a production grade cloud. These should not be treated as the ultimate truth, as every cloud is different and has diverse user demands. But some assumptions remain true, no matter what you deploy:

- OpenStack is not suitable for every type of workload.

- You can get away with losing a couple of compute nodes.

- You cannot get away with losing user instance images and snapshots.

- You cannot get away with losing user vital data placed on block storage nodes.

- You cannot get away with block storage performing poorly (remember SCSI timeouts?).

- You cannot get away with cutting user quotas because you overprovisioned hardware or took on too many tenants.

- You cannot scale properly with shared resources.