High Availability (HA) for OpenStack Platform Services: MySQL + RabbitMQ

Oleg Gelbukh's last blog article gave us a bird's eye view of different aspects of high availability in OpenStack. Even though all OpenStack components are designed with high availability in mind, the software still relies on external software, such as the database and the messaging system. It is left up to the user to deploy them in a fail-proof manner.

It is very important to bear in mind that everything stateful in OpenStack is done via the message system and the database, and all other components are stateless (excluding Glance). The database and message system in OpenStack are its heart and veins. While the queue system lets numerous components communicate, the database holds the cluster state. Both of them take part in every user request, whether it's displaying a list of instances or spawning a new VM.

The default for messaging is RabbitMQ and the default database is MySQL. They are known workhorses in the industry and in our experience they generally suffice even for large deployments in terms of scalability. In theory, any database supporting SQLAlchemy will do, but most users stick with the default. For messaging, there is no real alternative to RabbitMQ, although there are people working on a ZeroMQ driver for OpenStack.

How the messages and database work in OpenStack

Let's first consider how the database and message system components act together in OpenStack. To do this, I will describe the flow of the most popular user request: provisioning an instance.

The user submits a request to OpenStack by interacting with the nova-api component. Nova-api processes the instance creation request by invoking the create_instance function from the nova-compute API. The function does the following:

- It verifies user input (e.g., checks whether the requested VM image, flavor, and networks exist). If they are not specified, it tries to get the defaults (e.g., default flavor, network).

- It checks the request against user quotas.

- After positive verification of the above, it creates an entry for the instance in the OpenStack database (the create_db_entry_for_new_instance function).

- It calls the _schedule_run_instance function, which passes the user request to the nova-scheduler component via the message queue, using the AMQP protocol. The body of this request contains the instance parameters:

      request_spec = {
          'image': jsonutils.to_primitive(image),
          'instance_properties': base_options,
          'instance_type': instance_type,
          'num_instances': num_instances,
          'block_device_mapping': block_device_mapping,
          'security_group': security_group,
      }

  The _schedule_run_instance function ends by actually sending the message to AMQP by invoking the scheduler_rpcapi.run_instance function.
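The steps above can be sketched in a few lines of Python. This is only an illustration of the order of operations, not nova's actual code: a plain dict stands in for the nova database, a list stands in for the AMQP queue, and the function signature, the `QuotaExceeded` exception, the `vm_state` field, and the default flavor name are all hypothetical.

```python
import uuid


class QuotaExceeded(Exception):
    """Raised when a request asks for more instances than the quota allows."""


def create_instance(request, known_images, quota_left, db, queue):
    # 1. Verify user input and fall back to defaults where nothing was given.
    image = request.get("image")
    if image not in known_images:
        raise ValueError(f"unknown image: {image!r}")
    flavor = request.get("flavor", "m1.small")  # hypothetical default flavor
    num_instances = request.get("num_instances", 1)

    # 2. Check the request against the user's quota.
    if num_instances > quota_left:
        raise QuotaExceeded(f"requested {num_instances}, only {quota_left} left")

    # 3. Record the new instance in the database before anything is scheduled.
    instance_uuid = str(uuid.uuid4())
    db[instance_uuid] = {
        "image": image,
        "flavor": flavor,
        "host": None,              # filled in later by the scheduler
        "vm_state": "scheduling",  # hypothetical state name
    }

    # 4. Hand the request to the scheduler through the message queue.
    queue.append({
        "method": "run_instance",
        "args": {
            "instance_uuid": instance_uuid,
            "request_spec": {
                "image": image,
                "instance_type": flavor,
                "num_instances": num_instances,
            },
        },
    })
    return instance_uuid
```

Note that the database is written before the message is sent, so even at this early stage both systems have already been touched by a single user request.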

Now the scheduler takes over. It receives the message with the request specs, and based on them and its scheduling policies, it tries to find an appropriate host to spawn the instance. This is an excerpt from the nova-scheduler log files while this is being done (in this example FilterScheduler is used):

Host filter passes for ubuntu from (pid=15493) passes_filters /opt/stack/nova/nova/scheduler/host_manager.py:163

Filtered [host 'ubuntu': free_ram_mb:1501 free_disk_mb:5120] from (pid=15493) _schedule /opt/stack/nova/nova/scheduler/filter_scheduler.py:199

It chooses the host with the least cost by applying a weighting function (there is only one host, so weighting does not change anything here):

Weighted WeightedHost host: ubuntu from (pid=15493) _schedule /opt/stack/nova/nova/scheduler/filter_scheduler.py:209
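The filter-then-weigh idea behind these log lines can be sketched as follows. This is a simplified illustration rather than the FilterScheduler implementation: the `schedule` function and the host dictionaries are hypothetical, and the cost function (more free RAM means lower cost) is an assumption chosen only to make the example concrete.

```python
def schedule(hosts, ram_mb, disk_mb):
    """Return the name of the cheapest host that can fit the request."""
    # Filtering: drop hosts that cannot satisfy the request at all.
    passing = [
        h for h in hosts
        if h["free_ram_mb"] >= ram_mb and h["free_disk_mb"] >= disk_mb
    ]
    if not passing:
        raise RuntimeError("no valid host found")

    # Weighting: assumed cost function, where more free RAM means lower cost.
    def cost(host):
        return -host["free_ram_mb"]

    return min(passing, key=cost)["name"]


hosts = [
    {"name": "ubuntu", "free_ram_mb": 1501, "free_disk_mb": 5120},
    {"name": "tiny", "free_ram_mb": 4096, "free_disk_mb": 100},
]
chosen = schedule(hosts, ram_mb=512, disk_mb=1024)
```

Here the second host has more free RAM but fails the disk filter, so it never reaches the weighting step.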

After the proper compute host has been determined to run the instance, the scheduler invokes the cast_to_compute_host function, which:

- updates the "host" entry for the instance in the nova database (host = the compute host on which the instance will be spawned) and

- sends a message via AMQP to nova-compute on this specific host to run the instance. The message includes the UUID of the instance to run and the next action to take, which is run_instance.

In response, nova-compute on the chosen host calls the method _run_instance, which gets the instance parameters from the db (based on the UUID it was passed) and launches an instance with the appropriate parameters. During the course of the instance setup, nova-compute also communicates via AMQP with nova-network in order to set up all the networking (including IP address assignment and DHCP server setup). At various stages of the spawning process, the state of the VM is saved to the nova db, using the function _instance_update.
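The hand-off from the scheduler to the compute host can be sketched like this. As before, this is a toy model under stated assumptions: a dict stands in for the nova database, a dict of lists stands in for per-host AMQP queues, and `compute_handle_next`, the `vm_state` field, and the state names are hypothetical.

```python
def cast_to_compute_host(db, queues, instance_uuid, host):
    # Scheduler side: record the chosen host, then message that host's queue.
    db[instance_uuid]["host"] = host
    queues.setdefault(host, []).append(
        {"method": "run_instance", "args": {"instance_uuid": instance_uuid}}
    )


def compute_handle_next(db, queues, host):
    # Compute side: take one message and load the parameters by UUID.
    message = queues[host].pop(0)
    instance_uuid = message["args"]["instance_uuid"]
    instance = db[instance_uuid]  # parameters come from the db, not the message

    history = []
    for state in ("building", "networking", "active"):  # hypothetical states
        instance["vm_state"] = state  # stands in for _instance_update
        history.append(state)
    return instance_uuid, history
```

The message itself carries only the UUID and the action, so the compute host cannot do anything useful if the database is unreachable at that moment.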

We can see that when communication between different OpenStack components is involved, AMQP is used. Also, the database is updated several times to reflect the VM provisioning state. So if we lose any of these components, we would severely disrupt basic functions of the OpenStack cluster:

- A RabbitMQ loss will render us unable to perform any user tasks. Also, some resources (like VMs being spawned) will be left in a disrupted state.

- A database loss will cause even more disastrous effects: While all instances will be running, we will not be able to tell who they belong to, what host they have landed on, or what IP addresses they have. Taking into account that you might be running a cloud-scale number of instances (perhaps several thousand), this situation would be unrecoverable.

HA solutions for the database

You can guard against a database failure through careful backups and data replication. In the case of MySQL, many well-documented solutions exist, including MySQL Cluster (an "official" MySQL clustering suite), MMM (Multi-Master Replication Manager), and XtraDB Cluster from Percona.

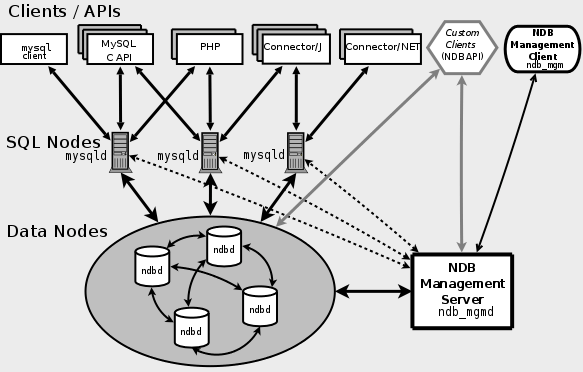

MySQL Cluster

MySQL Cluster relies on a special storage engine called NDB (Network DataBase). The engine is a cluster of servers called "data nodes," governed by the "management node." Data is partitioned and replicated between the data nodes, and at least two replicas exist for a given piece of data. All replicas are guaranteed to reside on different data nodes. On top of the data nodes runs a farm of MySQL servers configured with NDB storage on the backend. Each of the mysqld processes has read/write capabilities, and they can be load balanced for efficiency and high availability.

MySQL Cluster architecture

MySQL Cluster guarantees synchronous replication; the lack of it is an obvious flaw of the traditional replication mechanism. It does have some limitations compared to other storage engines, which are covered in the MySQL documentation.

XtraDB Cluster

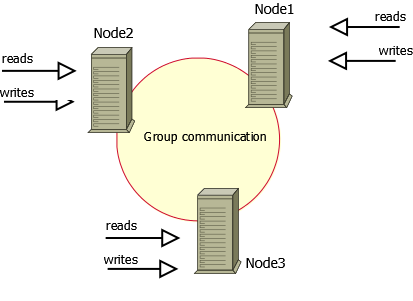

This is a solution from the highly acclaimed company Percona. XtraDB Cluster consists of a set of nodes, each of which runs an instance of Percona XtraDB with a set of patches to support replication. The patches introduce a set of hooks into the InnoDB storage engine and allow it to build a replication system underneath, which follows the WSREP specification.

XtraDB Cluster architecture

Each cluster node runs a patched version of mysqld from Percona. Each of them also holds a full copy of the data and is available for read/write operations. Replication is synchronous. Just like MySQL Cluster, XtraDB Cluster has some limitations of its own, which are described in the Percona documentation.

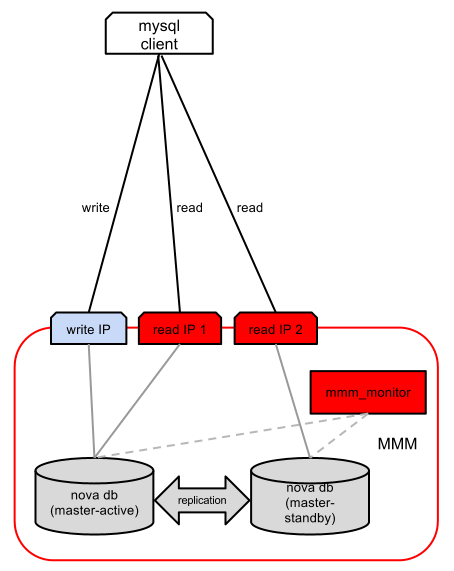

MMM

Multi-Master Replication Manager (MMM) employs a traditional master-slave mechanism for replication. It is based on a set of MySQL servers with at least two master replicas and a set of slaves, plus a dedicated monitoring node. On top of this set of hosts, an IP address pool is configured that MMM can dynamically move from host to host, depending on their availability. There are two types of these addresses:

- A "writer": Clients can write to the database by connecting to this IP (there can be only one writer address throughout the whole cluster).

- A "reader": Clients can read from the database by connecting to this IP (there can be a number of them to scale reads).

The monitor node checks the MySQL servers' availability and triggers a transfer of the "reader" and "writer" IPs if there is a server failure. The check set is rather simple: it includes a test of network availability and checks for the presence of mysqld on the host, the presence of the replication thread, and the size of the replication backlog. Load balancing reads between "reader" IPs is left up to the user (it can be done with HAProxy, round-robin DNS, etc.).
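The monitor's decision logic can be approximated with a short sketch. This is not MMM's code: the function names, the status dictionary keys, and the backlog threshold of 60 are hypothetical, and a real monitor would move actual IP addresses rather than return a mapping.

```python
def is_healthy(status, max_backlog=60):
    # The four checks described above; the threshold value is an assumption.
    return (
        status["ping"]
        and status["mysqld"]
        and status["rep_threads"]
        and status["rep_backlog"] <= max_backlog
    )


def assign_roles(statuses, masters, current_writer, writer_ip, reader_ips):
    """Return a mapping of virtual IP -> host that should hold it."""
    healthy = [host for host in statuses if is_healthy(statuses[host])]
    if not healthy:
        raise RuntimeError("no healthy MySQL host left")

    # Keep the single writer IP where it is unless its host has failed.
    if current_writer in healthy:
        writer = current_writer
    else:
        candidates = [m for m in masters if m in healthy]
        if not candidates:
            raise RuntimeError("no healthy master to take the writer IP")
        writer = candidates[0]

    # Spread the reader IPs over whatever hosts are still healthy.
    assignment = {writer_ip: writer}
    for index, ip in enumerate(reader_ips):
        assignment[ip] = healthy[index % len(healthy)]
    return assignment
```

For example, if the active master stops running mysqld, the writer IP moves to the standby master and the reader IPs collapse onto the surviving hosts.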

MMM architecture

MMM relies on traditional replication, which is asynchronous. This means there is always a chance the replicas might not have caught up with the master when it failed. Currently replication is single-threaded, which in the multicore, high-volume transaction world is often not enough, especially when one deals with long-running write queries. These concerns are addressed in the upcoming MySQL version, which implements a number of features for binlog optimizations and HA.

As for OpenStack-specific tutorials regarding MySQL HA, Alessandro Tagliapietra presents an interesting approach to ensuring MySQL availability using master-slave replication, plus Pacemaker with Percona's Pacemaker resource agent.

HA solutions for the message queue

Due to its nature, RabbitMQ data is very volatile. Since messaging is all about speed and volume of data, all the messages are kept in RAM unless you define queues as "durable," which makes RabbitMQ write them to disk. This is actually supported by OpenStack with the setting rabbit_durable_queues=True in nova.conf. Even though the messages are written to disk and thus will survive a crash or restart of the RabbitMQ server, durable queues should not be considered a real HA solution, since:

- RabbitMQ does not perform fsync to disk upon receipt of each message, so when a server crashes, there can still be messages residing in filesystem buffers and not actually on the disk. After a reboot they will be lost.

- RabbitMQ still resides on one node only.
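To make the first point concrete, here is what the difference between a plain write and a write followed by fsync looks like at the file level. This is a generic Python illustration and says nothing about how RabbitMQ implements its message store; the `append_message` helper and the file name are hypothetical.

```python
import os
import tempfile


def append_message(path, payload, sync):
    """Append one message; with sync=True, force it to disk before returning."""
    with open(path, "ab") as log:
        log.write(payload + b"\n")
        if sync:
            log.flush()             # push Python's buffer to the operating system
            os.fsync(log.fileno())  # ask the OS to write its buffers to disk


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "queue.log")
    append_message(path, b"run_instance", sync=False)  # fast, may sit in buffers
    append_message(path, b"run_instance", sync=True)   # slower, on disk on return
```

Without the fsync call, a message that was "written" can still be sitting in operating system buffers when the machine loses power, which is exactly the window described above.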

RabbitMQ can be clustered, and a clustered RabbitMQ is called a "broker." Clustering itself is more about scalability than high availability, and it suffers from a severe flaw: all virtual hosts, exchanges, users, etc., are replicated except the message queues themselves. To address this, a mirrored queues feature has been implemented. Clustering and queue mirroring should be combined to achieve full fault tolerance of RabbitMQ.

There is also a solution based on Pacemaker but it is considered obsolete in favor of the above.

It's still worth pointing out that none of the above clustering modes are supported directly by OpenStack; however, Mirantis has done some serious work in the field (more about this later on).

Mirantis deployment experience

At Mirantis we have deployed highly available MySQL using MMM (Multi-Master Replication Manager) for a number of our customers. Although some developers have expressed concerns about MMM on the web, we have seen no major issues with it in our experience, and we treat it as a "good enough" solution. However, we do know that some people have had issues with it, so we are now looking at architectures based on WSREP's synchronous replication approach (e.g., Galera Cluster, XtraDB Cluster), as it by definition provides more data consistency and manageability, as well as simpler setup.

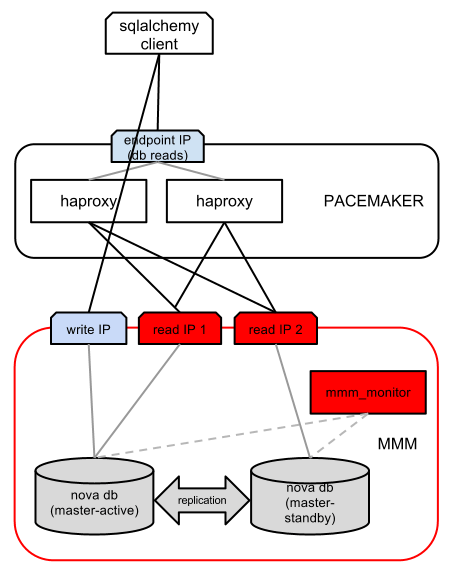

Below is an illustration of the setup we put together for a large OpenStack deployment:

Database HA is assured by MMM: master-master replication with one standby master (only the active one supports writes, and both masters support reads, so we have one "writer" IP and two "reader" IPs). The mmm_monitor checks the availability of both masters and shuffles the "reader" and "writer" IPs accordingly.

On top of MMM, HAProxy load balances read traffic between both "reader" IPs for better performance. Of course, one may also add a number of slaves with further "reader" IPs for scalability. While HAProxy is good at load balancing traffic, it does not provide high availability itself, so a second instance of HAProxy is present, and a Pacemaker resource is created for both of them. If one of the HAProxy instances fails, Pacemaker will handle moving the IP address from the dead instance to the other.

Since we can have only one "writer" IP, we do not need to load balance it and write requests go straight to it.

With this approach we can ensure scalability of read requests by adding more slaves to the DB farm plus load balancing with HAProxy, and we also maintain high availability by using Pacemaker (to detect HAProxy failures) plus MMM (to detect database host failures).
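The read/write split described above boils down to a very small routing rule. In the real deployment this is done by connecting to different IPs, with HAProxy doing the balancing; the sketch below only illustrates the rule in Python. The `DbRouter` class, the IP addresses, and the way statements are classified by their first keyword are all assumptions made for the example.

```python
import itertools


class DbRouter:
    """Send writes to the single writer IP and spread reads over reader IPs."""

    READ_VERBS = ("SELECT", "SHOW")

    def __init__(self, writer_ip, reader_ips):
        self.writer_ip = writer_ip
        self._readers = itertools.cycle(reader_ips)  # simple round robin

    def route(self, sql):
        # Classify by the first keyword; assumes a non-empty statement.
        verb = sql.lstrip().split(None, 1)[0].upper()
        if verb in self.READ_VERBS:
            return next(self._readers)
        return self.writer_ip


router = DbRouter("10.0.0.10", ["10.0.0.11", "10.0.0.12"])
targets = [
    router.route("SELECT * FROM instances"),
    router.route("SELECT * FROM fixed_ips"),
    router.route("UPDATE instances SET host = 'ubuntu'"),
]
```

Adding a slave with a new "reader" IP only extends the list the reads rotate over; the write path stays a single address.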

As for RabbitMQ HA in OpenStack, Mirantis has proposed a patch for nova with mirrored queues support. From the user's perspective it adds two new options to be put into nova.conf:

- rabbit_ha_queues=True/False: turns queue mirroring on or off.

- rabbit_addresses=["rabbit_host1","rabbit_host2"]: lets users specify a RabbitMQ HA clustered host pair.

Technically, what happens is that x-ha-policy:all is added to each queue.declare call inside nova, and round-robin logic for connecting to the cluster hosts is plugged in. Setting up the RabbitMQ cluster itself is left to the user.
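A minimal sketch of those two pieces follows. It is not the Mirantis patch itself: the helper names are hypothetical, the `connect` callable stands in for whatever AMQP client is used, and only the `x-ha-policy` argument and the two option names come from the text above.

```python
import itertools


def queue_declare_kwargs(name, rabbit_ha_queues, durable=False):
    # With mirroring on, every queue.declare carries x-ha-policy: all.
    arguments = {"x-ha-policy": "all"} if rabbit_ha_queues else {}
    return {"queue": name, "durable": durable, "arguments": arguments}


def connect_with_failover(rabbit_addresses, connect, max_attempts=None):
    """Try the cluster hosts in round-robin order until one accepts."""
    attempts = max_attempts or len(rabbit_addresses)
    hosts = itertools.cycle(rabbit_addresses)
    last_error = None
    for _ in range(attempts):
        host = next(hosts)
        try:
            return host, connect(host)  # connect is supplied by the caller
        except ConnectionError as exc:
            last_error = exc            # remember why, then try the next host
    raise ConnectionError(f"no RabbitMQ host reachable: {last_error}")


def fake_connect(host):
    # Stand-in for a real client: pretend the first host is down.
    if host == "rabbit_host1":
        raise ConnectionError("connection refused")
    return f"connection to {host}"


host, connection = connect_with_failover(
    ["rabbit_host1", "rabbit_host2"], fake_connect
)
kwargs = queue_declare_kwargs("compute.ubuntu", rabbit_ha_queues=True)
```

Because the queue is mirrored on every node, reconnecting to the second host is enough for the client to find the same queue again.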

Further information

I've presented several options for ensuring high availability of the database and messaging system. Here is a reading list for further research on the subject.

http://wiki.openstack.org/HAforNovaDB: HA for the OpenStack db

http://wiki.openstack.org/RabbitmqHA: HA for the queue system

http://www.hastexo.com/blogs/florian/2012/03/21/high-availability-openstack: a post describing various aspects of OpenStack HA

http://docs.openstack.org/developer/nova/devref/rpc.html: how OpenStack messaging works

http://www.laurentluce.com/posts/openstack-nova-internals-of-instance-launching/: a nice walkthrough of the process of instance launching

https://lists.launchpad.net/openstack/pdfGiNwMEtUBJ.pdf: presentation on nova HA

http://openlife.cc/blogs/2011/may/different-ways-doing-ha-mysql/: the title explains everything ;-)

http://www.linuxjournal.com/article/10718: an article on MySQL replication

http://www.mysqlperformanceblog.com/2010/10/20/mysql-limitations-part-1-single-threaded-replication/: addresses replication performance problems

https://github.com/jayjanssen/Percona-Pacemaker-Resource-Agents/blob/master/doc/PRM-setup-guide.rst: an article on Percona Replication Manager

http://www.rabbitmq.com/clustering.html: RabbitMQ clustering

http://www.rabbitmq.com/ha.html: RabbitMQ mirrored queues