Guest Post: Offloading CPU data traffic in an OpenStack cloud with iSCSI over RDMA

At Mellanox, we’ve recently introduced several plugins for OpenStack. In this post, we’ll cover the background for these technologies and show you how to get started with the iSER (iSCSI Extensions for RDMA) plugin for your OpenStack cloud.

Data center computing has classically relied on several types of communication between compute and storage servers, because compute servers constantly read data from and write data to the storage servers. To get the most out of a server’s CPU, communication between the compute server and the storage server must have the lowest possible latency and the highest possible bandwidth, so that CPU cycles are spent processing data rather than waiting for it.

For a variety of reasons, iSCSI is the predominant transport between storage and compute. When iSCSI runs over TCP, its traffic is processed by the CPU, so the iSCSI data path, like other CPU-processed protocols such as TCP, UDP, and NFS, must wait in line with applications and system processes for its turn on the CPU. This not only creates a bottleneck in the network data path, it also consumes system resources that could otherwise be used to run jobs faster. In a classic data center, where workloads and compute resources are fairly stable, much of this can be tuned, but it remains an issue.

In a virtualized or cloud environment, this problem becomes more acute. Because the data channels can’t be locked down, it is hard to predict when a CPU or vCPU will have to give up a chunk of processing power just to move data through I/O. Add the layers of the virtualized environment, and the CPU spends a significant amount of time managing how the OS handles this traffic. This is true for both physical and virtual CPUs.

Mellanox ConnectX adapters bypass the operating system and CPU by using RDMA (Remote Direct Memory Access), which enables much more efficient data movement. iSER (iSCSI Extensions for RDMA) is used to accelerate hypervisor traffic, including storage access, VM migration, and data and VM replication. RDMA offloads data movement to the ConnectX hardware, which provides zero-copy message transfers for SCSI packets. The result is higher performance, lower latency and access time, and lower CPU overhead.

iSER can deliver six times the performance of traditional TCP/IP-based iSCSI. Because iSER runs over RDMA on both Ethernet and InfiniBand, it brings together work from both communities and reduces the number of storage protocols a user must learn and maintain.

The RDMA bypass lets the data path skip the line. Data is placed in memory immediately upon receipt, without being subject to variable delays caused by CPU load. This has three effects:

- There is no waiting in line, so transaction latency is very low.

- Because there is no contention for resources, the latency is consistent.

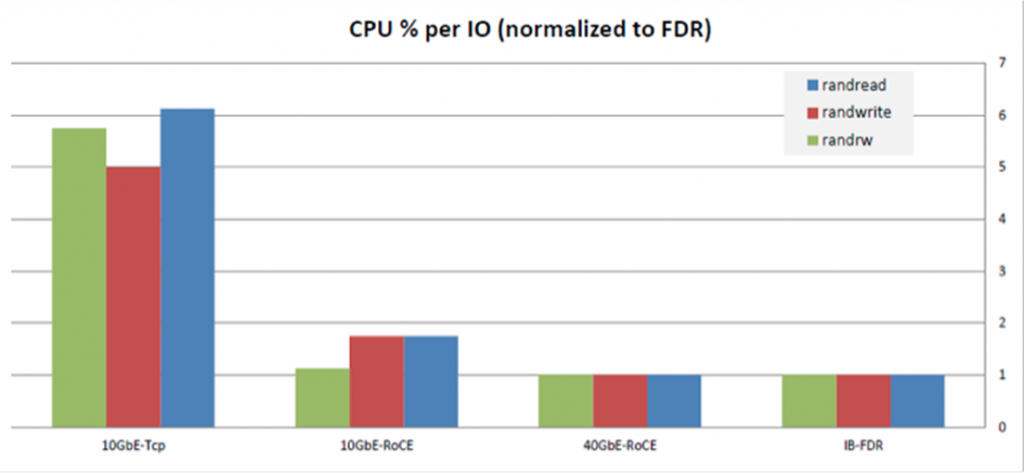

- By skipping the OS, using RDMA results in a large savings of CPU cycles.

With a more efficient system in place, those saved CPU cycles can be used to accelerate application performance.

Our general tests showed that when data movement is offloaded to the hardware with the iSER protocol, link utilization reaches the limit of the PCIe bus.
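To put "the PCIe limit" in concrete terms, here is a back-of-the-envelope calculation of a PCIe bandwidth ceiling. The post does not state which PCIe generation or lane width was used; this minimal sketch assumes a PCIe 3.0 x8 slot (8 GT/s per lane, 128b/130b line encoding) and does not subtract transaction-layer protocol overhead, so achievable throughput will be somewhat lower than the number it prints.

```python
# Back-of-the-envelope PCIe bandwidth ceiling.
# ASSUMPTION: a PCIe 3.0 x8 slot; the post does not specify the slot configuration.

def pcie_bandwidth_gbytes(gtransfers_per_lane: float, lanes: int,
                          payload_bits: int, encoded_bits: int) -> float:
    """Return raw per-direction bandwidth in GB/s after line encoding.

    Transaction-layer (TLP/DLLP) overhead is NOT subtracted, so this is an
    upper bound rather than achievable throughput.
    """
    # Each transfer moves one bit per lane; only payload_bits of every
    # encoded_bits carry data because of the line encoding.
    bits_per_second = gtransfers_per_lane * 1e9 * lanes * payload_bits / encoded_bits
    return bits_per_second / 8 / 1e9  # bits -> bytes -> gigabytes


gen3_x8 = pcie_bandwidth_gbytes(8.0, 8, 128, 130)
print(f"PCIe 3.0 x8 ceiling: {gen3_x8:.2f} GB/s per direction")
```

Comparing a measured iSER throughput figure against a ceiling like this shows how close the link comes to saturating the bus for a given slot.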

Network performance is a significant constraint for both data center and cloud services. In the cloud, you often can’t predict where and when data is going to flow, so you can run into bottlenecks when you least expect them.

Our experience with virtualized environments led us to apply the same lessons to OpenStack, so we built a plugin for Cinder, the OpenStack block storage service. Most block storage use cases are extremely sensitive to storage latency, and because block requests come in all shapes and sizes, they again introduce bottlenecks.

To test this, we ran traffic to a RAM device (eliminating disk-side latency) and measured ~1.3 GBps over TCP versus ~5.5 GBps over iSER, with much lower CPU overhead, as expected. The test flow was to create a volume, attach it to a VM, detach it from the VM, and delete the volume.
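The two throughput figures above can be turned into a speedup ratio and converted to line-rate units with a little arithmetic. This sketch assumes "GBps" means gigabytes per second (1 GB = 10^9 bytes); the constants are the approximate values reported for the RAM-device test.

```python
# Compare the RAM-device throughput figures from the test.
# ASSUMPTION: "GBps" means gigabytes per second (1 GB = 1e9 bytes).

TCP_ISCSI_GBPS = 1.3  # approximate iSCSI-over-TCP throughput reported
ISER_GBPS = 5.5       # approximate iSER throughput reported


def speedup(baseline: float, accelerated: float) -> float:
    """Ratio of accelerated throughput to baseline throughput."""
    return accelerated / baseline


def to_gigabits(gbytes_per_s: float) -> float:
    """Convert GB/s to Gb/s (8 bits per byte)."""
    return gbytes_per_s * 8


ratio = speedup(TCP_ISCSI_GBPS, ISER_GBPS)
print(f"iSER / TCP throughput ratio: {ratio:.1f}x")  # about 4.2x
print(f"TCP:  {to_gigabits(TCP_ISCSI_GBPS):.1f} Gb/s")
print(f"iSER: {to_gigabits(ISER_GBPS):.1f} Gb/s")
```

The roughly 4.2x ratio from this particular test is a separate data point from the six-fold figure cited earlier; the gain in any given deployment depends on the workload and hardware.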

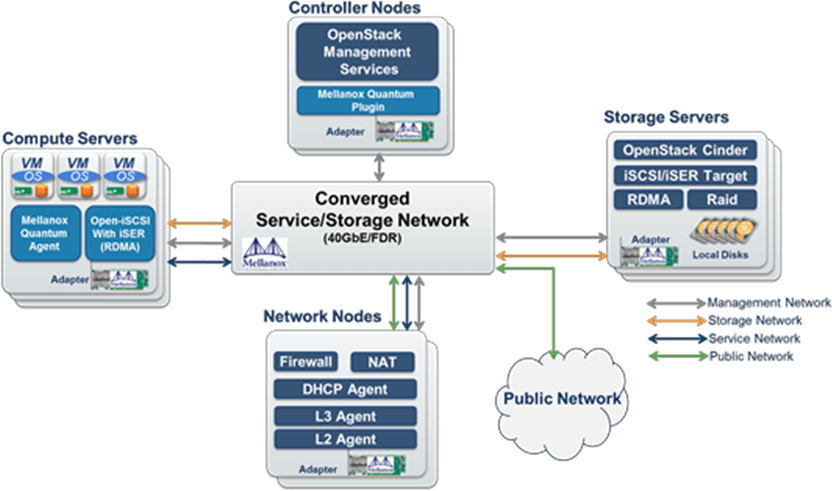

We also extended this capability with a Quantum (OpenStack networking) plugin that exposes the adapter’s embedded switch functionality. Using SR-IOV virtual functions, it gives each virtual machine’s hardware vNIC its own connectivity, security, and QoS attributes. Hardware vNICs can be mapped to guest VMs either through para-virtualization (using a tap device) or directly as a virtual PCI device. This way, you can connect your guest directly to the higher throughput and lower latency of iSER and keep your vCPU cycles for crunching data instead of handling data traffic.

References:

- Download the Mellanox OpenStack Cinder plugin files

- Configuring Cinder to use iSER on Mellanox ConnectX adapters

- Linux packages for Mellanox transports in various use cases via Mellanox OFED

For any questions, contact openstack@mellanox.com.

Eli Karpilovski is Cloud Market Development Manager at Mellanox.