Making sense of SDN with and without hardware-based networking in OpenStack Cloud

There’s a tremendous amount of talk about the networking business shifting from hardware-bound networking to SDN. I worry when I hear that SDN is the next ‘big data’ or ‘cloud’ trend. It makes me think that just as every application and infrastructure company now offers ‘cloud’ and every data technology company is building things for ‘big data’, soon every networking company will be leading the charge into SDN.

In this post, I want to talk about how to apply SDN, so I think it makes sense to start with a definition: Software-Defined Networking (SDN) is an approach to networking in which control is decoupled from hardware and given to a software application called a controller.
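As a minimal sketch of that split (a toy model, not any real controller's API; the `Switch` and `Controller` classes are hypothetical), the switch below only applies rules from its flow table, while every decision about where traffic goes lives in the controller:

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Switch:
    """Data plane only: forwards according to rules it has been given."""
    name: str
    flow_table: List[dict] = field(default_factory=list)

    def install(self, rule: dict) -> None:
        self.flow_table.append(rule)

    def forward(self, dst: str) -> Optional[int]:
        # First matching rule wins; the switch makes no decisions of its own.
        for rule in self.flow_table:
            if rule["match_dst"] == dst:
                return rule["out_port"]
        return None  # table miss: an OpenFlow switch would typically hand this to the controller


class Controller:
    """Control plane: holds the policy and programs every switch."""

    def __init__(self, switches: List[Switch]) -> None:
        self.switches: Dict[str, Switch] = {s.name: s for s in switches}

    def set_route(self, switch_name: str, dst: str, out_port: int) -> None:
        self.switches[switch_name].install({"match_dst": dst, "out_port": out_port})


s1 = Switch("s1")
ctrl = Controller([s1])
ctrl.set_route("s1", "10.0.0.5", 3)
print(s1.forward("10.0.0.5"))  # 3
print(s1.forward("10.0.0.9"))  # None
```

Changing where traffic goes means calling the controller, never touching the switch directly; that is the decoupling the definition describes.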

At Mirantis, we’ve done a bunch of work in this space. This is not the place to go into all those details, as some of the technology we’ve helped to build is not released yet, and some of it is in production at customers who don’t want their stories told in public. One thing I should mention is that we’ve invested a lot in the open source side too, most meaningfully in OpenStack Equilibrium, a project where we are leading the implementation of LBaaS, aka Load Balancing as a Service.

Putting SDN to work

One way to think about life before SDN is as “Hardware-Defined Networking”: routers, firewalls, switches, NICs, etc. all imposed control on network traffic flows based on what they knew about the traffic. To move beyond that, let’s look at two use cases for adding SDN to the data center. The first relies on creating an overlay purely in software; the second addresses participation by switch and router elements.

- Software overlay networks: These networks create tunnels that overlay a heterogeneous hardware infrastructure. Tunneling protocols include GRE, STT, CAPWAP, and VXLAN. Interfacing with hardware appliances for L3-L7 services usually requires a bridge device. Network policy controls such as QoS are implemented in the software edge devices (Open vSwitch and similar). For OpenStack, these types of SDN networks are provided by Nicira and Midokura.

- Mixed hardware and software networks: In this network type, while the software controller creates and modifies the network, infrastructure switches and routers understand and participate in the network flow. These networks use a protocol that hardware devices can understand and take part in: older network architectures used VLAN tags, while newer ones use the OpenFlow standard or, eventually, the VXLAN standard. Network policy controls such as QoS can be implemented either at the edge or in the hardware network fabric, provided the hardware understands the virtual network scheme. Two providers in this camp are BigSwitch and Cisco. Of course, for any implementation that is not greenfield, this ability to bring existing resources into the networking mix is quite attractive.
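To make the difference between the two approaches concrete, here is a sketch of the two encapsulations involved, assuming the header formats from RFC 7348 (VXLAN) and IEEE 802.1Q (VLAN tags). An overlay identifies a virtual network with a 24-bit VNI inside a UDP-encapsulated header that only the software edge has to parse; a VLAN tag carries a 12-bit ID that ordinary switches can read, which is what lets hardware participate but also caps the number of segments at 4094. The helper names are hypothetical.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned UDP destination port for VXLAN (RFC 7348)


def vxlan_header(vni: int) -> bytes:
    """8-byte VXLAN header: flags byte with the I bit (0x08) set, 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags(8) | reserved(24); word 2: VNI(24) | reserved(8)
    return struct.pack("!II", 0x08 << 24, vni << 8)


def dot1q_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """4-byte IEEE 802.1Q tag: TPID 0x8100, then PCP(3) | DEI(1) | VID(12)."""
    if not 1 <= vid <= 4094:
        raise ValueError("usable VLAN IDs are 1-4094")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("PCP is 3 bits, DEI is 1 bit")
    return struct.pack("!HH", 0x8100, (pcp << 13) | (dei << 12) | vid)


print(vxlan_header(5000).hex())     # 0800000000138800
print(dot1q_tag(100, pcp=5).hex())  # 8100a064
```

The PCP bits in the VLAN tag are one place where hardware in a mixed network can apply QoS directly, whereas in an overlay that policy has to be enforced at the software edge.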

Network Service Elements in OpenStack

Whether a network is deployed in software alone or with a combined software-hardware approach, there are some important network service elements to manage. These network service elements can be either hardware boxes or virtual appliances, and each calls for a different SDN integration strategy, as described below.

Virtual Service Insertion

Virtual appliances usually ride on top of the cloud SDN infrastructure and are isolated from the underlying implementation of the cloud itself. These services are usually managed by the cloud infrastructure via an API; being purely software, they can be instantiated on demand. Examples include the Quantum L3 router in Folsom and CloudPipe in Essex. Mirantis is working with the OpenStack community on standardizing the service insertion APIs and logic for Grizzly; in the meantime, we have architected a service insertion solution for the Folsom code base.

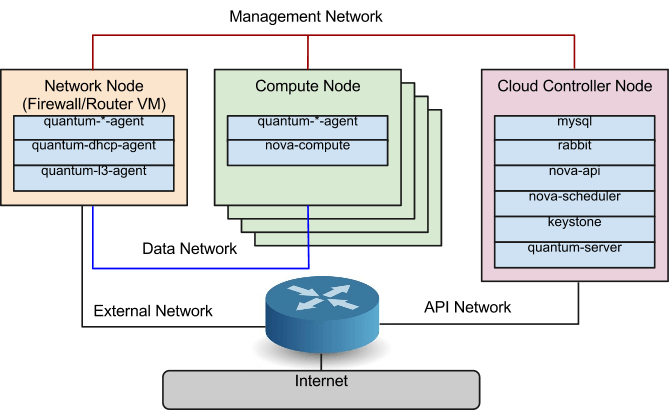

The first approach involves extending the L3 APIs offered by the L3 agent used in Quantum plugins such as OVS, and replacing the agent service code with equivalent functionality offered by the virtual appliance. It also involves a one-for-one replacement of the Network Node in Quantum with a VM. This VM offers services to multiple cloud tenants, using Linux namespaces to keep tenants segregated. This approach is shown in Figure 1.

Figure 1. Integrating Network Appliance VM as a Network Node

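A minimal sketch of how such a network-node VM might carve out per-tenant routing contexts with Linux network namespaces. It only builds the `ip`/`sysctl` command lines (running them requires root); the `qrouter-` prefix follows the naming the Quantum L3 agent uses, while `tenant_router_commands` and the router IDs are hypothetical:

```python
import shlex
from typing import Dict, List

NS_PREFIX = "qrouter-"  # namespace prefix used by the Quantum L3 agent


def tenant_router_commands(router_id: str) -> List[str]:
    """Hypothetical helper: commands that give one tenant router its own namespace."""
    ns = NS_PREFIX + router_id
    argv = [
        ["ip", "netns", "add", ns],
        ["ip", "netns", "exec", ns, "ip", "link", "set", "lo", "up"],
        # ip_forward is per namespace, so each tenant router routes independently.
        ["ip", "netns", "exec", ns, "sysctl", "-w", "net.ipv4.ip_forward=1"],
    ]
    return [shlex.join(a) for a in argv]


# One appliance VM hosting routers for two tenants.
routers: Dict[str, str] = {"tenant-a": "r1", "tenant-b": "r2"}
plan = {tenant: tenant_router_commands(rid) for tenant, rid in routers.items()}
for tenant, cmds in plan.items():
    print(tenant)
    for c in cmds:
        print("  " + c)
```

Because each router lives in its own namespace with its own interfaces and routing table, two tenants can reuse overlapping address ranges such as 10.0.0.0/24 on the same VM without colliding.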

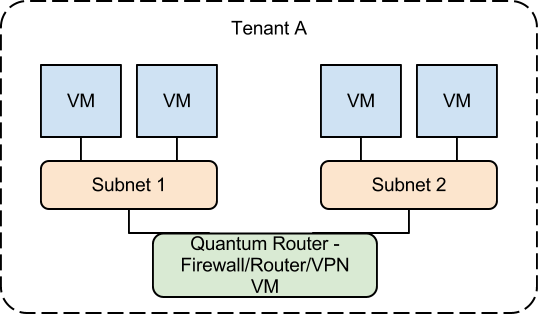

A second approach creates network elements on demand for each tenant as Nova compute guests; this happens when a tenant invokes the appropriate API to create an element such as a router, firewall, or VPN. This approach is shown in Figure 2.

Figure 2. Integrating Advanced Network VM as a Quantum router

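The on-demand flow can be sketched as a small dispatcher that boots one dedicated guest per tenant element. `ServiceInserter`, the image names, and the injected `boot_vm` callable (standing in for a Nova API call) are all hypothetical:

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical appliance images, one per element type a tenant can request.
APPLIANCE_IMAGES = {
    "router": "appliance-router",
    "firewall": "appliance-firewall",
    "vpn": "appliance-vpn",
}


@dataclass
class ServiceInserter:
    # (tenant_id, instance_name, image) -> instance id; stands in for a Nova boot call.
    boot_vm: Callable[[str, str, str], str]
    instances: Dict[Tuple[str, str, str], str] = field(default_factory=dict)

    def create_element(self, tenant_id: str, kind: str, name: str) -> str:
        if kind not in APPLIANCE_IMAGES:
            raise ValueError(f"unsupported element type: {kind}")
        key = (tenant_id, kind, name)
        if key not in self.instances:
            # One dedicated guest per tenant element: the VM boundary isolates tenants.
            self.instances[key] = self.boot_vm(tenant_id, f"{kind}-{name}", APPLIANCE_IMAGES[kind])
        return self.instances[key]


booted: List[Tuple[str, str, str]] = []


def fake_boot(tenant: str, name: str, image: str) -> str:
    booted.append((tenant, name, image))
    return f"vm-{len(booted)}"


si = ServiceInserter(fake_boot)
first = si.create_element("t1", "router", "edge")   # boots vm-1
second = si.create_element("t1", "router", "edge")  # same request: reuses vm-1
third = si.create_element("t2", "router", "edge")   # another tenant: boots vm-2
print(first, second, third)
```

Keying instances by tenant keeps repeated API calls idempotent while still giving each tenant its own element.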

Being able to create discrete, standalone virtual network service elements on demand makes it much easier to build multi-tenant network services in the SDN infrastructure.

Hardware-based Service Insertion into OpenStack

Now that we’ve seen the straightforward case of inserting virtual network service elements, let’s look at adding a hardware appliance to an SDN, which is more of a challenge. First, hardware appliances usually do not understand the virtual network topology of software overlay networks, so they require a bridge device. The bridge can be a dedicated general-purpose compute host or a VM. It flattens the virtual network, presenting a plain segment to the hardware appliance, which can then either offer single-tenant services and be provisioned on the fly from a pool, or offer multi-tenant services, but without network segregation on its segment.
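The single-tenant option can be sketched as a pool allocator behind the bridge: each tenant's overlay network is flattened onto a segment that gets its own appliance, and the appliance goes back to the pool when the tenant detaches. This is a hypothetical model that covers only the single-tenant case; the class, appliance names, and network IDs are illustrative:

```python
from collections import deque
from typing import Dict, Iterable, Tuple


class BridgedAppliancePool:
    """Hypothetical model: one pooled appliance per flattened tenant segment."""

    def __init__(self, appliances: Iterable[str]) -> None:
        self.free = deque(appliances)
        self.assigned: Dict[str, Tuple[str, int]] = {}  # tenant -> (appliance, overlay net id)

    def attach(self, tenant_id: str, overlay_net_id: int) -> str:
        # The bridge presents the tenant's overlay network as a plain segment,
        # so a dedicated appliance on that segment needs no tenant awareness.
        if tenant_id in self.assigned:
            return self.assigned[tenant_id][0]
        if not self.free:
            raise RuntimeError("appliance pool exhausted")
        appliance = self.free.popleft()
        self.assigned[tenant_id] = (appliance, overlay_net_id)
        return appliance

    def detach(self, tenant_id: str) -> None:
        appliance, _ = self.assigned.pop(tenant_id)
        self.free.append(appliance)  # available to be provisioned for the next tenant


pool = BridgedAppliancePool(["fw-a", "fw-b"])
print(pool.attach("t1", 5001))  # fw-a
print(pool.attach("t2", 5002))  # fw-b
pool.detach("t1")
print(pool.attach("t3", 5003))  # fw-a
```

The pool size bounds how many tenants can be served at once, which is the trade-off against the multi-tenant mode that gives up segregation.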

For mixed SDNs, inserting a hardware network appliance is easier. For example, older-style VLAN infrastructure hardware can participate directly in the network scheme, so appliances that offer internal virtualization capabilities can be “split” between multiple tenants while still offering traffic and control segregation. We built a deployment using this strategy for one of our clients, integrating the Juniper SSL VPN into each discrete tenant network.
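The VLAN-based split can be sketched as an allocator that gives each tenant its own VLAN ID and a matching virtual context on the appliance; the appliance then uses the tag on each incoming frame to decide which tenant's context handles it. The VLAN range, the `ctx-` naming, and the classes are hypothetical assumptions:

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class TenantContext:
    vlan: int
    context: str


class VlanContextAllocator:
    """Hypothetical: one VLAN ID and one appliance virtual context per tenant."""

    def __init__(self, first: int = 100, last: int = 199) -> None:
        if not 1 <= first <= last <= 4094:
            raise ValueError("VLAN range must lie within 1-4094")
        self.free = list(range(first, last + 1))
        self.by_tenant: Dict[str, TenantContext] = {}
        self.by_vlan: Dict[int, str] = {}

    def allocate(self, tenant_id: str) -> TenantContext:
        if tenant_id in self.by_tenant:
            return self.by_tenant[tenant_id]
        if not self.free:
            raise RuntimeError("VLAN range exhausted")
        vid = self.free.pop(0)
        ctx = TenantContext(vlan=vid, context=f"ctx-{tenant_id}")
        self.by_tenant[tenant_id] = ctx
        self.by_vlan[vid] = tenant_id
        return ctx

    def context_for_frame(self, vid: int) -> Optional[str]:
        # The appliance picks the tenant context from the frame's VLAN tag;
        # frames on unallocated VLANs reach no tenant context at all.
        tenant = self.by_vlan.get(vid)
        return None if tenant is None else self.by_tenant[tenant].context


alloc = VlanContextAllocator()
acme = alloc.allocate("acme")
globex = alloc.allocate("globex")
print(acme.vlan, acme.context)        # 100 ctx-acme
print(globex.vlan, globex.context)    # 101 ctx-globex
print(alloc.context_for_frame(101))   # ctx-globex
print(alloc.context_for_frame(150))   # None
```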

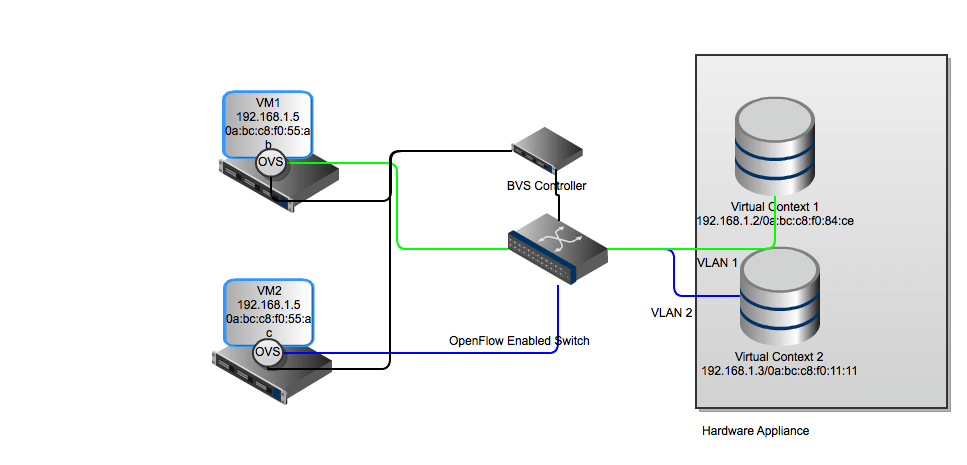

For newer OpenFlow networks, Mirantis has worked with BigSwitch on an approach in which a particular flow is translated into a VLAN by an OpenFlow-compatible switch operating in mixed OpenFlow/legacy mode, such as the Arista 7050. In this way, the HW appliance can internally segregate network traffic via VLAN tags and partition its services on a tenant-by-tenant basis.

Figure 3. OpenFlow/VLAN Integration

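A sketch of the pair of flow entries such a translation implies, loosely modelled on OpenFlow 1.3 actions (`PUSH_VLAN`, `SET_FIELD` on `vlan_vid`, `POP_VLAN`, `OUTPUT`), where a present VLAN ID is written with the `OFPVID_PRESENT` bit (0x1000) set. The dict layout, port numbers, and function name are hypothetical, not the API of BigSwitch's controller or any particular library:

```python
from typing import Any, Dict, List

OFPVID_PRESENT = 0x1000  # OpenFlow 1.3: set in vlan_vid when a VLAN tag is present
ETH_TYPE_8021Q = 0x8100


def vlan_translation_flows(tenant_match: Dict[str, Any], vid: int,
                           appliance_port: int, tenant_port: int) -> List[Dict[str, Any]]:
    """Hypothetical flow entries: tag a tenant's flow toward the appliance, untag the return path."""
    if not 1 <= vid <= 4094:
        raise ValueError("usable VLAN IDs are 1-4094")
    tagged_vid = vid | OFPVID_PRESENT
    to_appliance = {
        "priority": 100,
        "match": dict(tenant_match),  # whatever identifies this tenant's flow
        "actions": [
            {"type": "PUSH_VLAN", "ethertype": ETH_TYPE_8021Q},
            {"type": "SET_FIELD", "field": "vlan_vid", "value": tagged_vid},
            {"type": "OUTPUT", "port": appliance_port},
        ],
    }
    from_appliance = {
        "priority": 100,
        "match": {"in_port": appliance_port, "vlan_vid": tagged_vid},
        "actions": [
            {"type": "POP_VLAN"},
            {"type": "OUTPUT", "port": tenant_port},
        ],
    }
    return [to_appliance, from_appliance]


flows = vlan_translation_flows({"in_port": 7}, vid=100, appliance_port=48, tenant_port=7)
print(hex(flows[0]["actions"][1]["value"]))  # 0x1064
```

Only the switch-to-appliance hop carries the tag, so the appliance sees a plain VLAN-per-tenant interface while the rest of the network stays OpenFlow-controlled.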

I want to close with an interesting edge case: VXLAN, the tunneling protocol developed by Cisco and VMware, originally for use in VMware environments. Currently, VXLAN is primarily available as part of the Cisco Nexus 1000V virtual switch product line. However, both Cisco and other hardware vendors are working on integrating VXLAN into their hardware switch lines, with the stated goal of offering mixed-mode SDNs (i.e., combining both software- and hardware-originated services). As it turns out, Arista has already released its 7150, which can translate VLANs into VXLAN, allowing HW-appliance-originated services to be inserted while still preserving multi-tenant segregation. Arista does not currently have a Quantum controller; however, developing one would not be a difficult exercise.
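Preserving multi-tenant segregation across a VLAN-to-VXLAN translation comes down to keeping the mapping one-to-one: if two VLANs landed on the same VNI, their tenants would end up sharing a segment. A minimal sketch of a validated translation table, assuming a static list of (VLAN ID, VNI) pairs; the helper name and values are hypothetical and do not reflect Arista's configuration model:

```python
from typing import Dict, Iterable, Set, Tuple


def build_vlan_vni_map(pairs: Iterable[Tuple[int, int]]) -> Dict[int, int]:
    """Hypothetical helper: validate a one-to-one VLAN ID -> VXLAN VNI table."""
    vlan_to_vni: Dict[int, int] = {}
    used_vnis: Set[int] = set()
    for vid, vni in pairs:
        if not 1 <= vid <= 4094:
            raise ValueError(f"invalid VLAN ID {vid}")
        if not 0 <= vni < 2**24:
            raise ValueError(f"invalid VNI {vni}")
        if vid in vlan_to_vni:
            raise ValueError(f"VLAN {vid} mapped twice")
        if vni in used_vnis:
            # Two VLANs on one VNI would merge two tenants' segments.
            raise ValueError(f"VNI {vni} already in use")
        vlan_to_vni[vid] = vni
        used_vnis.add(vni)
    return vlan_to_vni


vlan_to_vni = build_vlan_vni_map([(100, 5100), (101, 5101)])
vni_to_vlan = {vni: vid for vid, vni in vlan_to_vni.items()}  # reverse direction
print(vlan_to_vni[100], vni_to_vlan[5101])  # 5100 101
```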

One final thought: I’ve seen SDN discussed as a choice between hardware-controlled network services and SDN network services. As OpenStack’s capabilities have improved, that either/or framing has become an oversimplification: hardware appliances and software-defined networks can now be combined rather than chosen between.

References:

Arista: http://www.aristanetworks.com/en/solutions/network-virtualization

Cisco: https://communities.cisco.com/docs/DOC-31704

Nicira: http://nicira.com/sites/default/files/docs/NVP%20-%20The%20Network%20for%20OpenStack.pdf

BigSwitch: http://www.bigswitch.com/products/big-virtual-switch

OpenStack: http://wiki.openstack.org/Quantum/ServiceInsertion

OpenStack: https://blueprints.launchpad.net/quantum/+spec/services-insertion-wrapper