Placement control and multi-tenancy isolation with OpenStack Cloud: Bare Metal Provisioning, Part 2

In a previous post, we introduced bare-metal use cases for OpenStack Cloud and the capabilities it offers for them. Here, we're going to talk about how you can apply some of these approaches to a scenario that mixes virtualization with isolation of key components.

Isolation requirements are pretty common for OpenStack deployments. In fact, one could go as far as saying: "Without proper resource isolation, you can wave goodbye to the public cloud." OpenStack tries to fulfill this need in a number of ways, including (among many other things):

- GUI & API authentication with Keystone

- private images in Glance

- security groups

However, if we look under the hood of OpenStack, we will see a bunch of well-known open source components, such as KVM, iptables, bridges and iSCSI shares. How does OpenStack treat these components in terms of security? Frankly, it does hardly anything here. It is up to the sysadmin to go to each compute node and harden the underlying components on their own.

At Mirantis, one OpenStack deployment we dealt with had especially heavy security requirements. All the systems had to comply with several governmental standards for processing sensitive data. Still, we had to provide multi-tenancy. To comply with these standards, we decided that "sensitive" tenants should be given isolated compute nodes running a hardened configuration.

The component responsible for distributing instances across an OpenStack cluster is nova-scheduler. Its most sophisticated scheduler type, FilterScheduler, lets you enforce many instance placement policies based on "filters": for a given user request to spawn an instance, the filters determine the set of compute nodes capable of running it. A number of filters are already provided with the default nova-scheduler installation (they are listed in the nova-scheduler documentation). However, none of them fully satisfied our requirements, so we decided to implement our own, which we called "PlacementFilter".
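To make the filter mechanism concrete, here is a toy Python model of it (Python because nova itself is written in Python). It is a sketch under simplifying assumptions, not nova's code: `HostState`, `BaseHostFilter`, `EnoughRamFilter` and `schedule` are hypothetical stand-ins. Each filter exposes a `host_passes` predicate, and the scheduler keeps only the hosts that pass every filter; the real FilterScheduler then ranks the survivors, which this model skips.

```python
from dataclasses import dataclass


@dataclass
class HostState:
    """Hypothetical, simplified stand-in for nova's per-host state."""
    name: str
    free_ram_mb: int


class BaseHostFilter:
    """Stand-in base class: concrete filters override host_passes()."""

    def host_passes(self, host_state, filter_properties):
        raise NotImplementedError

    def filter_all(self, hosts, filter_properties):
        # Keep only the hosts this filter accepts.
        return [h for h in hosts if self.host_passes(h, filter_properties)]


class EnoughRamFilter(BaseHostFilter):
    """Hypothetical example filter: the host needs enough free RAM."""

    def host_passes(self, host_state, filter_properties):
        return host_state.free_ram_mb >= filter_properties["memory_mb"]


def schedule(hosts, filters, filter_properties):
    """Run every filter in turn; the surviving hosts can run the instance."""
    for host_filter in filters:
        hosts = host_filter.filter_all(hosts, filter_properties)
    return hosts


if __name__ == "__main__":
    hosts = [HostState("compute-1", 2048), HostState("compute-2", 8192)]
    survivors = schedule(hosts, [EnoughRamFilter()], {"memory_mb": 4096})
    print([h.name for h in survivors])  # ['compute-2']
```

For example, with `compute-1` (2048 MB free) and `compute-2` (8192 MB free), a request for 4096 MB leaves only `compute-2`.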

The main goal of the PlacementFilter is to "reserve" a whole compute node for a single tenant's instances, isolating them from other tenants' instances at the hardware level. When a tenant is created, it can be specified whether it is isolated from others or not (the default). For isolated tenants, only designated compute nodes should be used to provision VM instances. We define these nodes and assign them to specific tenants manually, by creating a number of host aggregates. In short, host aggregates are a way to group compute nodes with similar capabilities or purpose. The job of the PlacementFilter is to pick the proper aggregate (set of compute nodes) for a given tenant. Regular (non-isolated) tenants use "shared" compute nodes for VM provisioning.

In this deployment, we also used OpenStack to provision bare-metal nodes. Bare-metal nodes are isolated by their nature, so there's no need to assign them to the pool of isolated nodes for isolated tenants. (In fact, this post builds a bit on one of my previous posts about bare-metal provisioning.)

Solution architecture

During the initial cloud configuration, all servers dedicated to compute should be split into three pools:

- servers for multi-tenant VMs

- servers for the single-tenant VMs

- servers for bare-metal provisioning

Such grouping is required to introduce two types of tenants: "isolated tenants" and "common tenants". For isolated tenants, aggregates are used to create dedicated sets of compute nodes. The PlacementFilter later takes these aggregates into account during the scheduling phase.
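Since every compute server should end up in exactly one of these pools, a quick sanity check of the split can help before any aggregates are created. The following Python sketch is hypothetical: the `validate_pools` helper and the pool names are ours, not part of OpenStack. It only checks that the pools are disjoint and cover all known compute hosts.

```python
def validate_pools(all_hosts, pools):
    """Hypothetical helper: every compute host must be in exactly one pool.

    all_hosts: iterable of compute host names.
    pools: dict mapping a pool name to a set of host names.
    Returns a list of problems; an empty list means the split is valid.
    """
    problems = []
    seen = {}  # host name -> first pool it was found in
    for pool_name, hosts in pools.items():
        for host in hosts:
            if host in seen:
                problems.append(f"{host} is in both {seen[host]} and {pool_name}")
            else:
                seen[host] = pool_name
    # Hosts that were left out of every pool.
    for host in sorted(set(all_hosts) - set(seen)):
        problems.append(f"{host} is not in any pool")
    # Pool members that are not known compute hosts (e.g. typos).
    for host in sorted(set(seen) - set(all_hosts)):
        problems.append(f"{host} is not a known compute host")
    return problems


if __name__ == "__main__":
    pools = {
        "multi_tenant": {"compute-1", "compute-2"},
        "single_tenant": {"compute-3"},
        "bare_metal": {"bm-1"},
    }
    print(validate_pools(["compute-1", "compute-2", "compute-3", "bm-1"], pools))
```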

The PlacementFilter has two missions:

- schedule a VM on a compute node dedicated to the specific tenant, or on one of the default compute nodes if the tenant is non-isolated

- schedule an instance on a bare-metal host if a bare-metal instance was requested (no aggregate is required here, as a bare-metal instance is isolated from other instances by nature, at the hardware level)

The PlacementFilter passes only bare-metal hosts if the value 'bare_metal' is given for the 'compute_type' parameter in scheduler_hints.

If a non-bare-metal instance is requested, the filter looks up the aggregate of the project the instance belongs to and passes only hosts from that aggregate. If no aggregate is found for the project, a host from the default aggregate is chosen.
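The decision described in the last two paragraphs can be sketched in Python as follows. This is our illustration, not the original PlacementFilter source: the `Aggregate` and `Host` types, the `is_bare_metal` flag and the `placement_host_passes` signature are assumptions. In nova, the project ID and scheduler hints would come from the scheduling request; here they are passed in directly.

```python
from dataclasses import dataclass, field


@dataclass
class Aggregate:
    """Simplified host aggregate: a name, member hosts and key/value metadata."""
    name: str
    hosts: set
    metadata: dict = field(default_factory=dict)


@dataclass
class Host:
    name: str
    is_bare_metal: bool = False  # hypothetical flag marking bare-metal hosts


DEFAULT_AGGREGATE = "default"  # as created by "nova aggregate-create default nova"


def allowed_hosts(project_id, aggregates):
    """Hosts of the project's dedicated aggregate, else of the default one."""
    for agg in aggregates:
        # Dedicated aggregates carry project_id=<tenant_id> in their metadata.
        if agg.metadata.get("project_id") == project_id:
            return agg.hosts
    for agg in aggregates:
        if agg.name == DEFAULT_AGGREGATE:
            return agg.hosts
    return set()


def placement_host_passes(host, project_id, scheduler_hints, aggregates):
    """Return True if `host` may run the requested instance."""
    if scheduler_hints.get("compute_type") == "bare_metal":
        # Bare-metal request: only bare-metal hosts pass; no aggregate is needed.
        return host.is_bare_metal
    # Virtual instance: restrict to the project's aggregate (or the default one).
    return host.name in allowed_hosts(project_id, aggregates)
```

With a default aggregate holding `compute-1` and `compute-2` and an aggregate for `project1` holding `compute-3`, a VM request from `project1` passes only `compute-3`, a VM request from any other project passes only the default hosts, and a request with `compute_type=bare_metal` passes only bare-metal hosts, regardless of aggregates.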

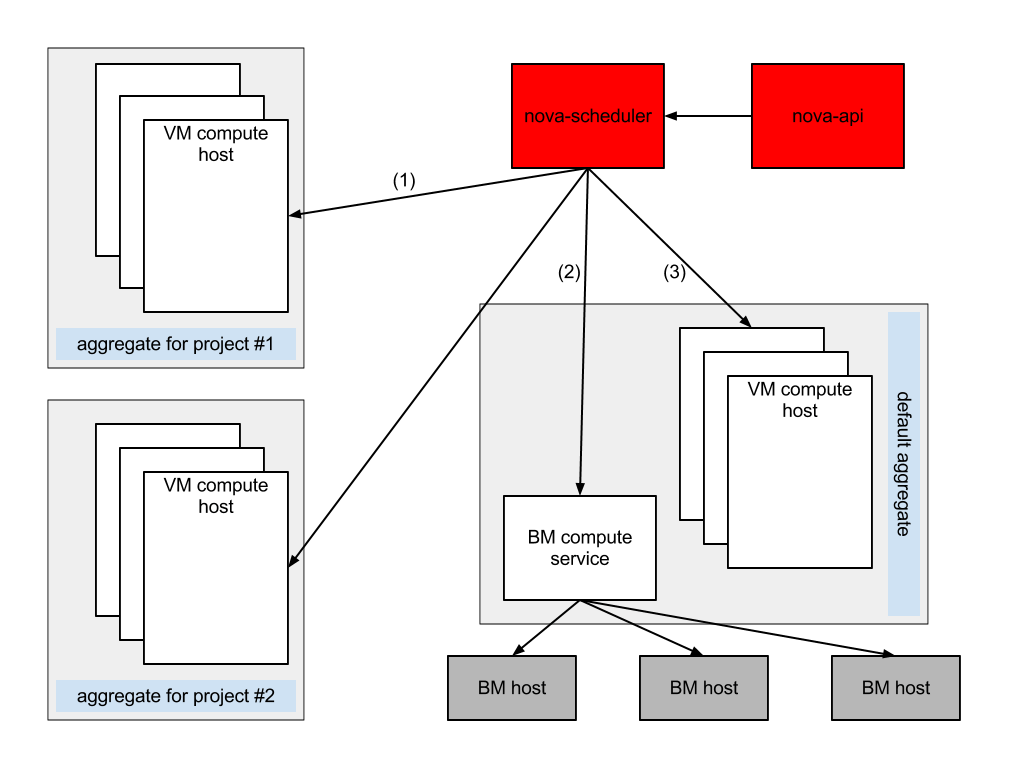

The diagram above illustrates how the PlacementFilter works for both bare-metal and virtual instances:

(1) A member of project#1 requests an instance. It lands on the project's own isolated set of compute nodes, within its dedicated host aggregate.

(2) A member of project#1 requests a bare-metal instance. This time no aggregate is needed as bare-metal nodes are by nature isolated on the hardware level, so the bare-metal node is taken from the general pool.

(3) Instances of tenants that are not assigned any host aggregate land in the default "public" aggregate, where compute nodes are shared among different tenants' instances.

PlacementFilter setup

This is the procedure we follow to implement instance placement control:

- create a default aggregate for non-isolated instances and add compute nodes to it:

nova aggregate-create default nova

nova aggregate-add-host 1 compute-1

- add the host where the <bare-metal driver> runs to the default aggregate.

- install the PlacementFilter from packages or from source code, then add the following flags to the nova.conf file:

--scheduler_driver=nova.scheduler.filter_scheduler.FilterScheduler

--scheduler_available_filters=placement_filter.PlacementFilter

--scheduler_default_filters=PlacementFilter

- create an isolated tenant:

keystone tenant-create --name <project_name>

- create a dedicated aggregate for this tenant:

nova aggregate-create <aggregate_name> nova

nova aggregate-set-metadata <aggregate_id> project_id=<tenant_id>

- add hosts to the dedicated aggregate:

nova aggregate-add-host <aggregate_id> <host_name>

- spawn an instance:

nova boot --image <image_id> --flavor <flavor_id> <instance_name>

(the instance will be spawned on one of the hosts dedicated to the current tenant)

- spawn a bare-metal instance:

nova boot --image <image_id> --flavor <flavor_id> --hint compute_type=bare_metal <instance_name>
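The `--hint compute_type=bare_metal` option reaches the scheduler as part of a `scheduler_hints` dictionary, which is what the PlacementFilter inspects. The Python sketch below illustrates how such `key=value` options can be turned into that dictionary. As far as we know, python-novaclient behaves similarly (including collecting repeated keys into a list), but treat `parse_hints` as a hypothetical helper rather than novaclient's code.

```python
def parse_hints(hint_args):
    """Hypothetical helper: turn ["key=value", ...] into a scheduler_hints dict.

    Each string is split at the first '='. Repeating a key collects all of
    its values into a list.
    """
    hints = {}
    for hint in hint_args:
        key, _sep, value = hint.partition("=")
        if key in hints:
            # Second occurrence of a key: promote the single value to a list.
            if isinstance(hints[key], str):
                hints[key] = [hints[key]]
            hints[key].append(value)
        else:
            hints[key] = value
    return hints


if __name__ == "__main__":
    print(parse_hints(["compute_type=bare_metal"]))
```

Here `parse_hints(["compute_type=bare_metal"])` returns `{'compute_type': 'bare_metal'}`, which is exactly the key/value pair the filter checks for.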

Summary

With the advent of FilterScheduler, implementing custom scheduling policies has become quite simple. The way filters are organized in OpenStack makes it formally as simple as overriding a single function called "host_passes". However, the design of the filter itself can become quite complex and is left to the imagination of sysadmins and developers (ha!). As for host aggregates, until recently there was no filter that took them into account (that's why we implemented PlacementFilter). However, recently (in August 2012) a new filter appeared, called AggregateInstanceExtraSpecsFilter, which seems to do a similar job.
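For comparison, the core idea behind AggregateInstanceExtraSpecsFilter, as we understand it, is to match a flavor's extra_specs against the metadata of the aggregates a host belongs to. The Python sketch below is a simplified approximation, not nova's implementation: it uses exact string equality only, and `aggregates` is a hypothetical list of plain dictionaries.

```python
def host_aggregate_metadata(host_name, aggregates):
    """Merge the metadata of every aggregate containing the host.

    Returns a dict mapping each metadata key to the set of values seen.
    """
    merged = {}
    for agg in aggregates:
        if host_name in agg["hosts"]:
            for key, value in agg["metadata"].items():
                merged.setdefault(key, set()).add(value)
    return merged


def extra_specs_host_passes(host_name, flavor_extra_specs, aggregates):
    """Simplified check: every extra_spec must appear in the host's aggregate metadata."""
    metadata = host_aggregate_metadata(host_name, aggregates)
    for key, required in flavor_extra_specs.items():
        if required not in metadata.get(key, set()):
            return False
    return True


if __name__ == "__main__":
    aggregates = [
        {"hosts": {"compute-3"}, "metadata": {"tenant": "project1"}},
        {"hosts": {"compute-1", "compute-2"}, "metadata": {}},
    ]
    print(extra_specs_host_passes("compute-3", {"tenant": "project1"}, aggregates))
```

One visible difference from the PlacementFilter is that the match is driven by the requested flavor rather than by the requesting project.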